When building Computer Vision systems, the accuracy of object detection models hinges on the quality of data annotation. While axis-aligned bounding boxes have been the standard, oriented bounding boxes (OBB) are gaining traction due to their ability to capture object orientations more precisely. This blog explores what oriented bounding box annotation is, its advantages over regular bounding box annotation, the Machine Learning models that can predict oriented bounding boxes, and a glimpse into how Mindkosh offers powerful features to efficiently label oriented bounding boxes.

What is an oriented bounding box?

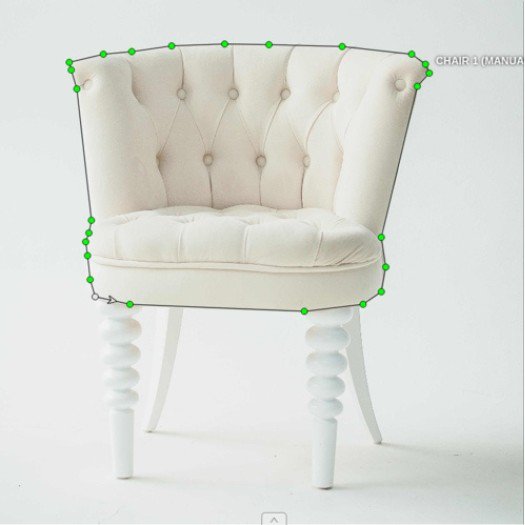

An oriented bounding box (OBB) is a bounding box that can be rotated to match the orientation of the object it encloses. Unlike an axis-aligned bounding box, whose edges are always parallel to the horizontal and vertical image axes, an OBB follows the object's own alignment, which usually gives a tighter and more accurate fit.

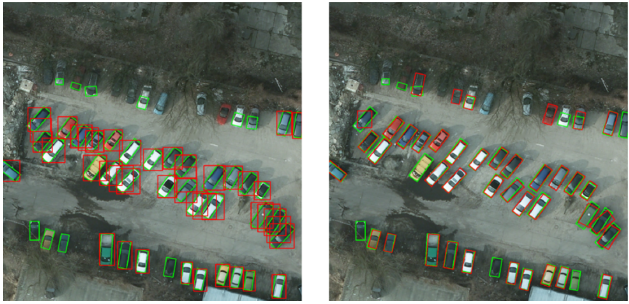

The need for oriented bounding boxes arose because, in certain cases, an axis-aligned rectangle does not describe an object well. For example, satellite images usually contain a large number of small objects that can appear at any in-plane rotation. Detecting these objects with regular axis-aligned bounding boxes causes several problems, such as large overlaps between neighboring boxes and a lot of background inside each box.

Some key characteristics of oriented bounding boxes are:

- Rotational Fit: The bounding box is rotated to match the object's orientation, ensuring a snug fit.

- Five Parameters: OBBs are commonly defined by five parameters: the center coordinates (x, y), width, height, and rotation angle (θ).

- Enhanced Precision: Captures objects' spatial orientation more precisely, especially for elongated and irregularly shaped objects.
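To make the five-parameter form concrete, here is a minimal Python sketch that converts (x, y, width, height, θ) into the four corner points of the box. It assumes θ is given in degrees and measured counter-clockwise in a y-up frame; annotation formats differ on this (some use radians, some measure clockwise in image coordinates), so treat the convention and the function name `obb_to_corners` as illustrative assumptions.

```python
import math

def obb_to_corners(cx, cy, w, h, theta_deg):
    """Return the four corners of an oriented box as (x, y) tuples.

    Assumption: theta is in degrees, counter-clockwise from the x-axis in a
    y-up frame. Corners come back in counter-clockwise order.
    """
    t = math.radians(theta_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    # Half-extent vector along the box's own width axis.
    dx_w, dy_w = (w / 2) * cos_t, (w / 2) * sin_t
    # Half-extent vector along the box's own height axis (width axis turned 90 degrees).
    dx_h, dy_h = -(h / 2) * sin_t, (h / 2) * cos_t
    return [
        (cx - dx_w - dx_h, cy - dy_w - dy_h),
        (cx + dx_w - dx_h, cy + dy_w - dy_h),
        (cx + dx_w + dx_h, cy + dy_w + dy_h),
        (cx - dx_w + dx_h, cy - dy_w + dy_h),
    ]

print(obb_to_corners(10, 5, 4, 2, 30))
```

For θ = 0 the result is just the familiar axis-aligned rectangle; any other angle swings the corners around the center while the width and height stay fixed.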

Differences between axis-aligned and oriented bounding boxes

Oriented bounding boxes offer several advantages over traditional axis-aligned bounding boxes, especially in complex scenarios where objects are not axis-aligned. Here are the primary benefits:

Improved Precision

- Tighter Fit: Oriented bounding boxes can snugly fit around objects regardless of their orientation, reducing the amount of background included within the box. This leads to more precise annotations.

- Reduced Overlap: In scenes with multiple objects, oriented bounding boxes can minimize the overlap between annotations, leading to clearer and more distinct object boundaries.

- Better for Arbitrarily Oriented Objects: Objects such as vehicles in aerial imagery and text in natural scenes often do not align with the image axes. Oriented bounding boxes handle these cases more effectively.

- Orientation Information: Provides valuable information about the orientation of objects, which can be crucial for tasks such as object tracking and scene understanding.
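The "tighter fit" point can be quantified with a little geometry. If an object is itself a w × h rectangle rotated by θ, the smallest axis-aligned box around it has width w|cos θ| + h|sin θ| and height w|sin θ| + h|cos θ|. The sketch below (a hypothetical helper, `aabb_background_fraction`) computes how much of that axis-aligned box is background. In this idealized case an OBB fits exactly and contains no background; real objects are rarely perfect rectangles, so actual numbers will differ.

```python
import math

def aabb_background_fraction(w, h, theta_deg):
    """Fraction of the tightest axis-aligned box that is NOT covered by a
    w x h rectangle rotated by theta_deg degrees."""
    t = math.radians(theta_deg)
    c, s = abs(math.cos(t)), abs(math.sin(t))
    aabb_w = w * c + h * s   # width of the enclosing axis-aligned box
    aabb_h = w * s + h * c   # height of the enclosing axis-aligned box
    return 1.0 - (w * h) / (aabb_w * aabb_h)

# A hypothetical elongated 40 x 10 object at a few rotations.
for angle in (0, 15, 30, 45):
    frac = aabb_background_fraction(40, 10, angle)
    print(f"{angle:>2} deg: {frac:.0%} of the axis-aligned box is background")
```

For this 40 × 10 object rotated by 45°, the enclosing axis-aligned box has an area of 1250 against the object's 400, so about 68% of it is background.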

Robustness in Object Detection

- Rotated Objects: Oriented bounding boxes are particularly useful for detecting rotated objects in images, which is common in applications like aerial surveillance, robotics, and autonomous driving.

- Varied Object Poses: Can represent objects at any in-plane rotation, making the annotation more versatile and robust.

Machine Learning models for oriented bounding box object detection

Several advanced Machine Learning models and techniques have been developed to predict oriented bounding boxes. These models enhance the precision of object detection by accurately capturing the orientation of objects. Here are some notable models:

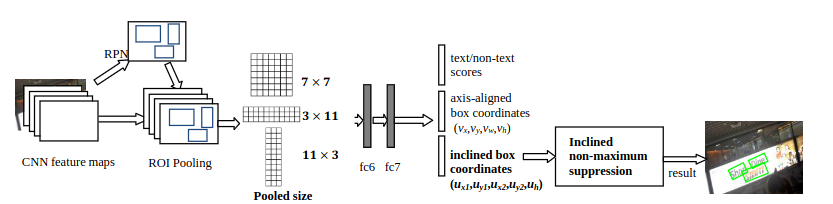

Rotational Region CNN (R2CNN)

R2CNN extends the Faster R-CNN model to predict rotated bounding boxes. It keeps Faster R-CNN's region proposal network, pools features for each proposal at several sizes (including elongated ones), and adds a head that regresses an inclined box alongside the axis-aligned one. It was originally proposed for text detection in natural scenes and is also applicable to aerial imagery analysis.

Link to paper

Link to github

Oriented Bounding Box Regression (OBBR)

OBBR uses a regression-based approach to directly predict the parameters of oriented bounding boxes, including the center coordinates, width, height, and rotation angle. One key advantage of OBBR is that it can be integrated with various backbone networks, such as ResNet and VGG, which gives flexibility in choosing the feature extraction network.
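Regression-based detectors rarely predict raw coordinates directly. A common pattern in rotated detectors (assumed here, not necessarily the exact formulation used by OBBR) is to predict offsets relative to a reference "anchor" box: center shifts scaled by the anchor size, log-ratios for width and height, and an angle difference. The sketch below shows that encoding and its inverse; the helper names and the [-90°, 90°) angle range are assumptions for illustration.

```python
import math

def wrap_angle(deg):
    """Map an angle into [-90, 90): a rectangle rotated by 180 degrees is
    the same rectangle, so angles are periodic."""
    return (deg + 90.0) % 180.0 - 90.0

def encode(gt, anchor):
    """Encode a ground-truth box (cx, cy, w, h, theta_deg) as regression
    targets relative to an anchor box in the same format."""
    gx, gy, gw, gh, g_theta = gt
    ax, ay, aw, ah, a_theta = anchor
    return (
        (gx - ax) / aw,                  # center offset, scaled by anchor size
        (gy - ay) / ah,
        math.log(gw / aw),               # scale change in log space
        math.log(gh / ah),
        wrap_angle(g_theta - a_theta),   # angle offset in degrees
    )

def decode(deltas, anchor):
    """Invert encode(): turn predicted deltas back into a box."""
    tx, ty, tw, th, t_theta = deltas
    ax, ay, aw, ah, a_theta = anchor
    return (
        ax + tx * aw,
        ay + ty * ah,
        aw * math.exp(tw),
        ah * math.exp(th),
        wrap_angle(a_theta + t_theta),
    )

anchor = (50, 50, 32, 16, 0)
targets = encode((52, 48, 30, 12, 30), anchor)
print(targets, decode(targets, anchor))
```

A network would be trained to output the values returned by `encode`, and `decode` turns its predictions back into boxes at inference time. Wrapping the angle keeps the regression target from jumping between angles that describe the same rectangle.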

Link to paper

Link to github

YOLOv5

Rotated variants of YOLOv5, available as open-source adaptations of the original repository, extend the popular YOLO model to predict rotated bounding boxes by modifying the loss function and network architecture to handle orientation. They combine the speed of YOLO with the ability to detect rotated objects, which makes them well suited to applications that require real-time processing.

Link to github

Rotated Single Shot Multibox Detector (R-SSD)

R-SSD is a modified version of the popular Single Shot MultiBox Detector (SSD) for detecting oriented bounding boxes. It extends SSD by predicting an additional parameter for the orientation angle. Notably, it maintains the efficiency of the original SSD model while improving performance on rotated objects, which makes it suitable for real-time applications.

Calculating oriented bounding box IoU

Intersection over Union (IoU) is a widely used evaluation metric in computer vision, especially for tasks involving object detection and segmentation. IoU measures how well predicted bounding boxes or masks match the location and extent of objects within an image.

IoU can be defined as the ratio of the intersection area to the union area of the predicted and ground truth bounding boxes or masks. Mathematically, it is expressed as:

$$\text{IoU} = \frac{\text{Area of Intersection}}{\text{Area of Union}}$$

where

- Area of Intersection is the overlapping area between the predicted bounding box and the ground truth bounding box.

- Area of Union is the total area covered by both the predicted and ground truth bounding boxes.
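For axis-aligned boxes, the two areas take only a few lines to compute. The sketch below assumes boxes are given as (x_min, y_min, x_max, y_max) and computes the union by inclusion-exclusion, so the overlapping region is not counted twice. The function name is illustrative.

```python
def iou_axis_aligned(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Overlap rectangle; its width or height is clamped to 0 if the boxes are disjoint.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter  # inclusion-exclusion
    return inter / union if union > 0 else 0.0

print(iou_axis_aligned((0, 0, 10, 10), (5, 0, 15, 10)))
```

Two 10 × 10 boxes that overlap by half their width have an intersection of 50 and a union of 150, so the call above returns about 0.333.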

Unlike axis-aligned bounding boxes, oriented bounding boxes can be rotated, which makes the calculation more complex: the overlap of two rotated rectangles is generally not a rectangle but a convex polygon with up to eight vertices. To calculate IoU for oriented bounding boxes, use the following method:

- Compute the four corner coordinates of each rotated bounding box from its center, width, height, and angle.

- Treat the rotated bounding boxes as polygons.

- Find the intersection area and the total (union) area covered by the two polygons, using a library such as Shapely.

- Use the same IoU formula as for axis-aligned bounding boxes.
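The steps above can be sketched in plain Python. To keep the example dependency-free, it swaps Shapely for a small Sutherland-Hodgman polygon clip, which is valid here because both rectangles are convex; with Shapely you would instead build two `Polygon` objects and read `a.intersection(b).area` and `a.union(b).area`. The (cx, cy, w, h, θ in degrees) box format and all function names are assumptions for illustration.

```python
import math

def obb_corners(cx, cy, w, h, theta_deg):
    """Corners of an oriented box in counter-clockwise order (y-up frame)."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    local = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each local corner by theta, then shift it to the box center.
    return [(cx + x * c - y * s, cy + x * s + y * c) for x, y in local]

def polygon_area(pts):
    """Absolute polygon area via the shoelace formula."""
    total = 0.0
    for i in range(len(pts)):
        x1, y1 = pts[i]
        x2, y2 = pts[(i + 1) % len(pts)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def clip(subject, clipper):
    """Sutherland-Hodgman: intersect `subject` with the convex,
    counter-clockwise polygon `clipper`."""
    def inside(p, a, b):
        # p lies on the left of (or on) the directed edge a -> b.
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def crossing(p, q, a, b):
        # Point where segment p -> q crosses the infinite line through a and b.
        dx, dy = q[0] - p[0], q[1] - p[1]
        ex, ey = b[0] - a[0], b[1] - a[1]
        t = ((a[0] - p[0]) * ey - (a[1] - p[1]) * ex) / (dx * ey - dy * ex)
        return (p[0] + t * dx, p[1] + t * dy)

    output = list(subject)
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        points, output = output, []
        if not points:
            break  # nothing left to clip: the polygons do not overlap
        for j in range(len(points)):
            p, q = points[j - 1], points[j]
            if inside(q, a, b):
                if not inside(p, a, b):
                    output.append(crossing(p, q, a, b))
                output.append(q)
            elif inside(p, a, b):
                output.append(crossing(p, q, a, b))
    return output

def iou_oriented(box_a, box_b):
    """IoU of two oriented boxes given as (cx, cy, w, h, theta_deg)."""
    poly_a, poly_b = obb_corners(*box_a), obb_corners(*box_b)
    overlap = clip(poly_a, poly_b)
    inter = polygon_area(overlap) if len(overlap) >= 3 else 0.0
    union = polygon_area(poly_a) + polygon_area(poly_b) - inter
    return inter / union if union > 0 else 0.0

print(iou_oriented((0, 0, 2, 2, 0), (0, 0, 2, 2, 45)))
```

As a sanity check, a 2 × 2 square and the same square rotated by 45° overlap in a regular octagon, and their IoU works out to 1/√2 ≈ 0.707.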

You can read more about calculating IoU for oriented bounding boxes here. The article also provides sample Python code to calculate the IoU for OBBs.

Technical Considerations and Challenges

Annotation Complexity

- Manual Effort: Annotating oriented bounding boxes manually can be more time-consuming and complex than annotating regular bounding boxes, especially for large datasets.

- Tooling: Requires specialized annotation tools that support rotation and angle specification. See the section on annotation tools below for more details.

Model Training

- Choice of ML model: Only a handful of ML models available today have an architecture designed to handle the additional complexity of predicting angles. Choosing the right model is crucial to get accurate results.

- Data Augmentation: Effective data augmentation techniques, such as random rotations and perspective transformations, may be required to train models that are robust to variations in object orientation.
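When images are rotated for augmentation, the OBB labels must be rotated with them. For a rotation by φ about some pivot (typically the image center), the box center rotates about the pivot, the width and height stay the same, and the box angle simply increases by φ. The sketch below assumes counter-clockwise angles in degrees, in the same frame as the image rotation, and leaves the pixel rotation itself to whatever image library you use; `rotate_obb` and `random_rotation_augment` are hypothetical helpers.

```python
import math
import random

def rotate_obb(box, angle_deg, pivot):
    """Rotate an oriented box (cx, cy, w, h, theta_deg) by angle_deg
    degrees counter-clockwise about pivot = (px, py)."""
    cx, cy, w, h, theta = box
    px, py = pivot
    t = math.radians(angle_deg)
    dx, dy = cx - px, cy - py
    # The center moves on a circle around the pivot.
    new_cx = px + dx * math.cos(t) - dy * math.sin(t)
    new_cy = py + dx * math.sin(t) + dy * math.cos(t)
    # Width and height are unchanged; the angles add. Wrap into [-90, 90)
    # because a rectangle turned by 180 degrees looks the same.
    new_theta = (theta + angle_deg + 90.0) % 180.0 - 90.0
    return (new_cx, new_cy, w, h, new_theta)

def random_rotation_augment(boxes, image_center, max_angle=180.0, rng=random):
    """Pick one random angle and apply it to every box of an image.
    The image itself must be rotated by the same angle, in the same direction."""
    angle = rng.uniform(-max_angle, max_angle)
    return angle, [rotate_obb(b, angle, image_center) for b in boxes]

angle, rotated = random_rotation_augment([(120, 80, 40, 10, 15)], image_center=(128, 128))
print(angle, rotated)
```

In practice you would also drop or clip boxes whose rotated centers fall outside the image, and check that your image library rotates in the same direction as the labels.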

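Inference Speed

Predicting rotated bounding boxes can introduce additional computational overhead, which affects the inference speed of the model. For example, computing IoU between rotated boxes, which post-processing steps such as non-maximum suppression rely on, requires polygon clipping instead of a few min/max comparisons. Optimizing the model architecture and implementation is essential for maintaining real-time performance.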

Oriented bounding box annotation tools

Annotating images with oriented bounding boxes requires specialized tools that can handle the added rotation parameter. At the very least, a good annotation tool should let you create rotated bounding boxes. In addition, the tool should help you:

- Manage large datasets.

- Resolve mistakes in a collaborative manner.

- Set up the annotation task with different quality-checking workflows.

- Show detailed status of the annotation project.

Only a handful of labeling tools currently allow you to draw oriented bounding boxes.

The Mindkosh annotation platform offers two tools to create oriented bounding boxes.

2-point method

With this method, you first create an axis-aligned bounding box by clicking on the top-left and bottom-right corners of your desired rectangle. Once the bounding box has been created, hover over it to display a rotation pivot icon. Click the icon and move your mouse to rotate the bounding box. Holding Shift while moving the mouse snaps the rotation to 5° increments.
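The 2-point interaction can be modeled with very little state. The sketch below is a hypothetical illustration of the idea, not Mindkosh's implementation: two clicks define an axis-aligned box, a drag around the center sets the angle, and holding Shift rounds it to the nearest 5°. It assumes angles in degrees computed with `math.atan2` in the same coordinate frame as the mouse positions; all function names are illustrative.

```python
import math

def box_from_two_clicks(p1, p2):
    """Axis-aligned box (cx, cy, w, h, theta) from two opposite corners,
    whichever corner is clicked first."""
    (x1, y1), (x2, y2) = p1, p2
    return ((x1 + x2) / 2, (y1 + y2) / 2, abs(x2 - x1), abs(y2 - y1), 0.0)

def drag_rotation(box, start_xy, current_xy):
    """Angle (degrees) swept by the mouse around the box center."""
    cx, cy = box[0], box[1]
    start = math.atan2(start_xy[1] - cy, start_xy[0] - cx)
    current = math.atan2(current_xy[1] - cy, current_xy[0] - cx)
    return math.degrees(current - start)

def rotate_box(box, angle_deg, shift_held=False, snap_step=5.0):
    """Apply a rotation; with Shift held, snap the final angle to a
    multiple of snap_step degrees."""
    cx, cy, w, h, theta = box
    new_theta = theta + angle_deg
    if shift_held:
        new_theta = round(new_theta / snap_step) * snap_step
    return (cx, cy, w, h, new_theta)

box = box_from_two_clicks((10, 20), (30, 40))
angle = drag_rotation(box, start_xy=(30, 30), current_xy=(27, 37))
print(rotate_box(box, angle, shift_held=True))
```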

Extreme points method

We recommend using this method to easily draw a rotated rectangle in one go. Simply click on the 4 extreme ends of the object, and an oriented bounding box will be created for you. This can accelerate the box creation process considerably, while ensuring a tight fit around the object.
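One plausible way to turn four clicked extreme points into an oriented box is to take the minimum-area rectangle that encloses them. A classic geometric result says such a rectangle has one side collinear with an edge of the points' convex hull, so it is enough to try each hull edge. The sketch below does exactly that; it is a hypothetical illustration of the idea, not a description of how Mindkosh computes its boxes, and it returns (cx, cy, w, h, θ in degrees).

```python
import math

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def obb_from_extreme_points(points):
    """Smallest-area rotated rectangle containing the clicked points."""
    hull = convex_hull(points)
    best = None
    for i in range(len(hull)):
        (x1, y1), (x2, y2) = hull[i], hull[(i + 1) % len(hull)]
        theta = math.atan2(y2 - y1, x2 - x1)
        c, s = math.cos(theta), math.sin(theta)
        # Project hull points onto the edge direction (u) and its normal (v).
        us = [x * c + y * s for x, y in hull]
        vs = [-x * s + y * c for x, y in hull]
        w, h = max(us) - min(us), max(vs) - min(vs)
        if best is None or w * h < best[0]:
            mu, mv = (max(us) + min(us)) / 2, (max(vs) + min(vs)) / 2
            # Rotate the rectangle's center back into image coordinates.
            center = (mu * c - mv * s, mu * s + mv * c)
            best = (w * h, (center[0], center[1], w, h, math.degrees(theta)))
    return best[1]

print(obb_from_extreme_points([(0, 1), (1, 0), (0, -1), (-1, 0)]))
```

Because the hull is computed first, the four points can be clicked in any order.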

You can learn more about the Mindkosh platform here. You can also sign up for free here and try the platform yourself.

Oriented bounding boxes offer significant advantages over axis-aligned bounding boxes, providing improved accuracy and precision. By capturing the orientation of objects and fitting them more tightly, OBBs enhance the performance of machine learning models in various applications, from autonomous driving to aerial image analysis. However, they also present new challenges, such as slower inference and more complex annotation. As new ML models offering better performance become available, oriented bounding boxes are expected to see wider adoption across a variety of Computer Vision tasks.