Reducing QC time for labeling large multi-modal datasets for autonomous vehicles

See how a startup in the autonomous delivery space used Mindkosh to drastically reduce the time spent on quality checking (QC) and improve the annotation quality of both point clouds and images.

Challenge

The startup faced major challenges in annotating large volumes of multi-modal sensor data to build a dataset that captures the nuances of different driving scenarios. Quality checking the labeled data across different sensors was also very time-consuming with traditional labeling methods. The team needed a solution that could seamlessly integrate multiple sensor data types and enable consistent object tracking both across sensors and over time.

Solution

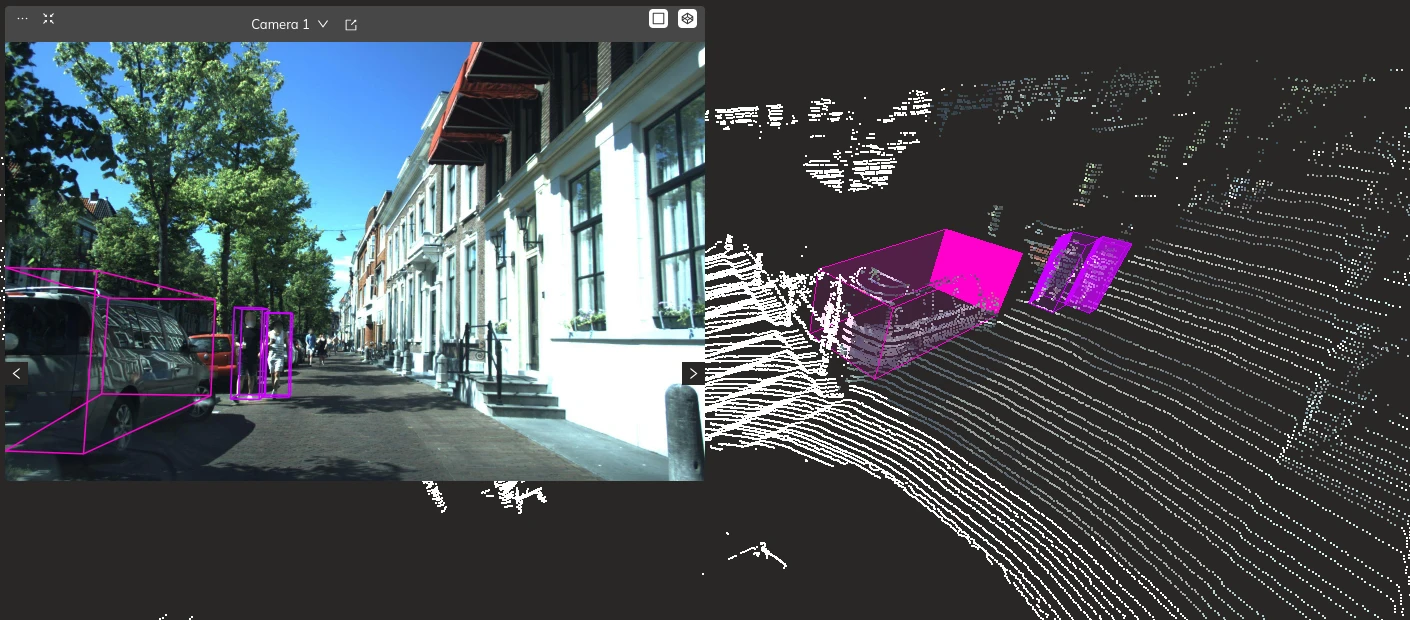

The team set up a workflow to ingest synchronized Lidar and camera data, along with pose information, into the Mindkosh platform. This made it easy to spot both static and dynamic objects in the scene. Our team also used the camera images to color the point clouds, making it easier for their labeling team to recognize objects. The team then combined Honeypot and spot-checking to measure label quality, and set up a 3-level QC workflow.

Outcome

This resulted in a significant reduction in the time spent on quality checking, while also improving the label quality of both point clouds and images. Keeping labels consistent across sensors had previously been a major challenge, but with the advanced annotation tools on Mindkosh, the resulting labels were much more consistent throughout the dataset. This improvement in quality led to better object detection models, helping make autonomous vehicles safer for everyone.