Understanding multi-sensor data labeling requirements

As machine learning models evolve to perceive the world with greater accuracy and depth, they increasingly rely on multi-sensor data, from RGB cameras and LiDAR sensors to depth maps, stereo vision, infrared, and even radar. This fusion of modalities powers a wide range of advanced applications:

- Autonomous vehicles, where LiDAR and cameras must work in sync

- Industrial robotics, navigating through space with depth and object awareness

- Aerial drones, combining RGB + depth for geospatial mapping

- Medical imaging, using multiple scans (e.g., MRI + ultrasound)

But while the sensing hardware is becoming more sophisticated, labeling this data correctly is still one of the biggest bottlenecks in building production-grade AI systems.

What is multi-sensor data labeling?

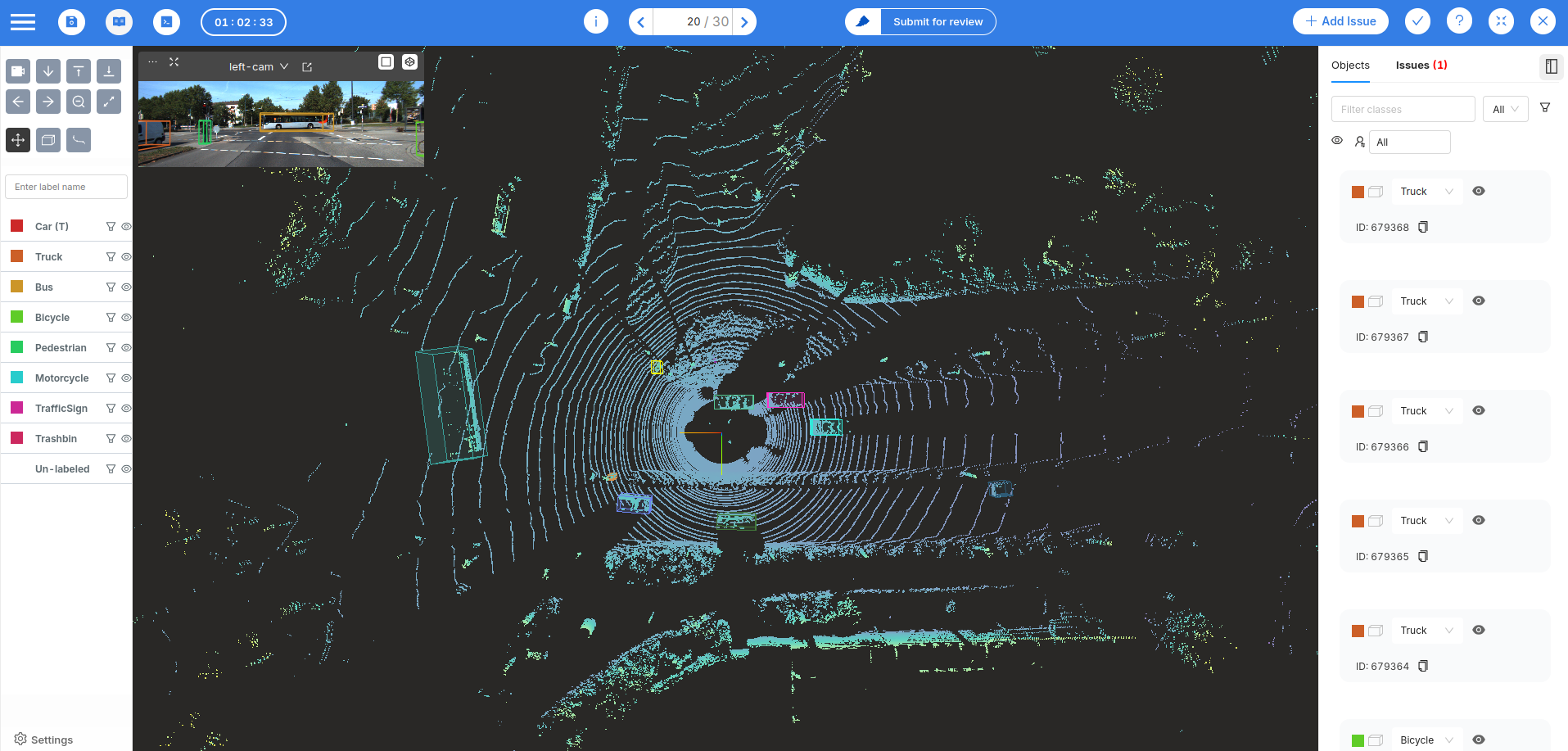

Multi-sensor data labeling refers to the process of annotating data across multiple aligned sensor streams, such as labeling objects in RGB images and matching them with 3D bounding boxes in LiDAR point clouds, or tracking keypoints across both depth maps and video.

This labeling is done on synchronized data streams and often requires cross-referencing across modalities. For example, a car detected in a LiDAR frame needs to be matched in the camera feed and tracked across time.
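To make the cross-referencing step concrete, here is a minimal sketch of how a 3D point from a LiDAR frame can be located in a camera image. It assumes a calibrated pinhole camera with no lens distortion and a camera frame where z points forward, x right and y down. The function name `project_lidar_point` and all parameter names are illustrative, not taken from any particular labeling tool.

```python
from typing import Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def project_lidar_point(
    point_lidar: Point3D,
    rotation: Sequence[Sequence[float]],  # assumed 3x3 LiDAR-to-camera rotation
    translation: Sequence[float],         # assumed LiDAR-to-camera translation (meters)
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float]]:
    """Return the pixel (u, v) for a LiDAR point, or None if it is behind the camera."""
    # Move the point into the camera frame: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None  # the point is not in front of the camera
    # Pinhole projection using the focal lengths and principal point
    return (fx * x / z + cx, fy * y / z + cy)


# Toy calibration: LiDAR and camera frames coincide (identity extrinsics).
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = project_lidar_point(
    (1.0, 0.5, 10.0), identity, [0.0, 0.0, 0.0],
    fx=1000.0, fy=1000.0, cx=640.0, cy=360.0,
)
print(pixel)  # (740.0, 410.0)
```

Projecting the eight corners of a 3D box this way gives the region of the image where the matching 2D annotation should sit. Real pipelines also have to account for lens distortion and for the time offset between the two sensors.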

Key challenges in annotating multi-modal data

- Sensor alignment and synchronization

Misaligned timestamps or different sensor frequencies can throw off annotations. Good tools must help sync and visualize fused data effortlessly.

- Complex geometry and annotation types

Annotating 3D bounding boxes, polylines for lanes, and depth-aware segmentation is far more difficult than drawing 2D boxes.

- Scalability across frames and sensors

A single perception sequence may contain thousands of frames from 3+ sensors. Tools must support interpolation, bulk editing, and automated pre-labeling.

- Maintaining annotation consistency

Annotators need ways to see how an object looks across views (top-down LiDAR, side-view RGB, etc.) and to maintain consistency across frames and sequences.

- Workflow management and quality control

Labeling at scale requires more than tools: it demands review systems, role management, audit logs, and QA pipelines to ensure accuracy.
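The sensor alignment and synchronization challenge can be illustrated with a small sketch that pairs each LiDAR sweep with the closest camera frame in time. It assumes both timestamp lists are sorted and given in milliseconds. The function name `match_nearest` and the `max_gap_ms` tolerance are hypothetical, chosen only for this example.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def match_nearest(
    lidar_ts: List[int],
    camera_ts: List[int],
    max_gap_ms: int,
) -> List[Tuple[int, Optional[int]]]:
    """Pair each LiDAR sweep index with the nearest camera frame index.

    A sweep is paired with None when no camera frame lies within max_gap_ms.
    Both timestamp lists must be sorted in ascending order.
    """
    pairs: List[Tuple[int, Optional[int]]] = []
    for i, t in enumerate(lidar_ts):
        pos = bisect_left(camera_ts, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = [j for j in (pos - 1, pos) if 0 <= j < len(camera_ts)]
        best = min(candidates, key=lambda j: abs(camera_ts[j] - t), default=None)
        if best is not None and abs(camera_ts[best] - t) > max_gap_ms:
            best = None  # too far apart to be treated as the same moment
        pairs.append((i, best))
    return pairs


# A 10 Hz LiDAR against a roughly 30 Hz camera that dropped some frames.
lidar = [0, 100, 200]
camera = [10, 43, 76, 110, 143, 250]
print(match_nearest(lidar, camera, max_gap_ms=20))
# [(0, 0), (1, 3), (2, None)]
```

The last sweep has no camera frame within 20 ms, so it is left unpaired instead of being matched to a stale image. Surfacing gaps like this is what lets an annotator trust that the fused view shows the same instant in every sensor.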

What the industry needs from sensor fusion annotation

Modern AI workflows, especially in AV, robotics, and geospatial applications, demand platforms that offer:

- Native support for multi-sensor fusion and calibration

- Rich annotation types (3D boxes, masks, polylines, keypoints)

- Sensor-aware timelines and playback

- Interpolation and automation for faster throughput

- Custom workflows, flexible QA, and real-time feedback loops

A true multi-sensor labeling solution must go beyond the basics: it must be a production-grade engine for building robust perception datasets.
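As an illustration of the interpolation requirement listed above, the sketch below fills in a 3D cuboid between two hand-labeled keyframes. It assumes simple linear motion between keyframes and a box described by center, size and yaw. The `Cuboid` class and `interpolate_cuboid` function are hypothetical and do not reflect how any specific platform implements the feature.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Cuboid:
    """Hypothetical 3D box: center and size in meters, yaw in radians."""
    center: Vec3
    size: Vec3
    yaw: float


def _lerp3(a: Vec3, b: Vec3, alpha: float) -> Vec3:
    """Linearly interpolate each component of two 3-vectors."""
    return (
        a[0] + alpha * (b[0] - a[0]),
        a[1] + alpha * (b[1] - a[1]),
        a[2] + alpha * (b[2] - a[2]),
    )


def interpolate_cuboid(
    start: Cuboid, end: Cuboid, start_frame: int, end_frame: int, frame: int
) -> Cuboid:
    """Estimate the cuboid at `frame`, between two labeled keyframes."""
    if start_frame >= end_frame:
        raise ValueError("start_frame must be less than end_frame")
    if not start_frame <= frame <= end_frame:
        raise ValueError("frame must lie between the two keyframes")
    alpha = (frame - start_frame) / (end_frame - start_frame)
    # Rotate through the shortest angle so yaw does not spin the long way round.
    delta = end.yaw - start.yaw
    shortest = math.atan2(math.sin(delta), math.cos(delta))
    return Cuboid(
        center=_lerp3(start.center, end.center, alpha),
        size=_lerp3(start.size, end.size, alpha),
        yaw=start.yaw + alpha * shortest,
    )


key_a = Cuboid(center=(0.0, 0.0, 0.0), size=(4.0, 2.0, 1.5), yaw=0.0)
key_b = Cuboid(center=(10.0, 0.0, 0.0), size=(4.0, 2.0, 1.5), yaw=0.0)
print(interpolate_cuboid(key_a, key_b, 0, 10, 5).center)  # (5.0, 0.0, 0.0)
```

With this approach an annotator labels only the keyframes and corrects the frames where the object deviates from straight-line motion, which is where most of the throughput gain comes from.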

Encord

What does Encord offer?

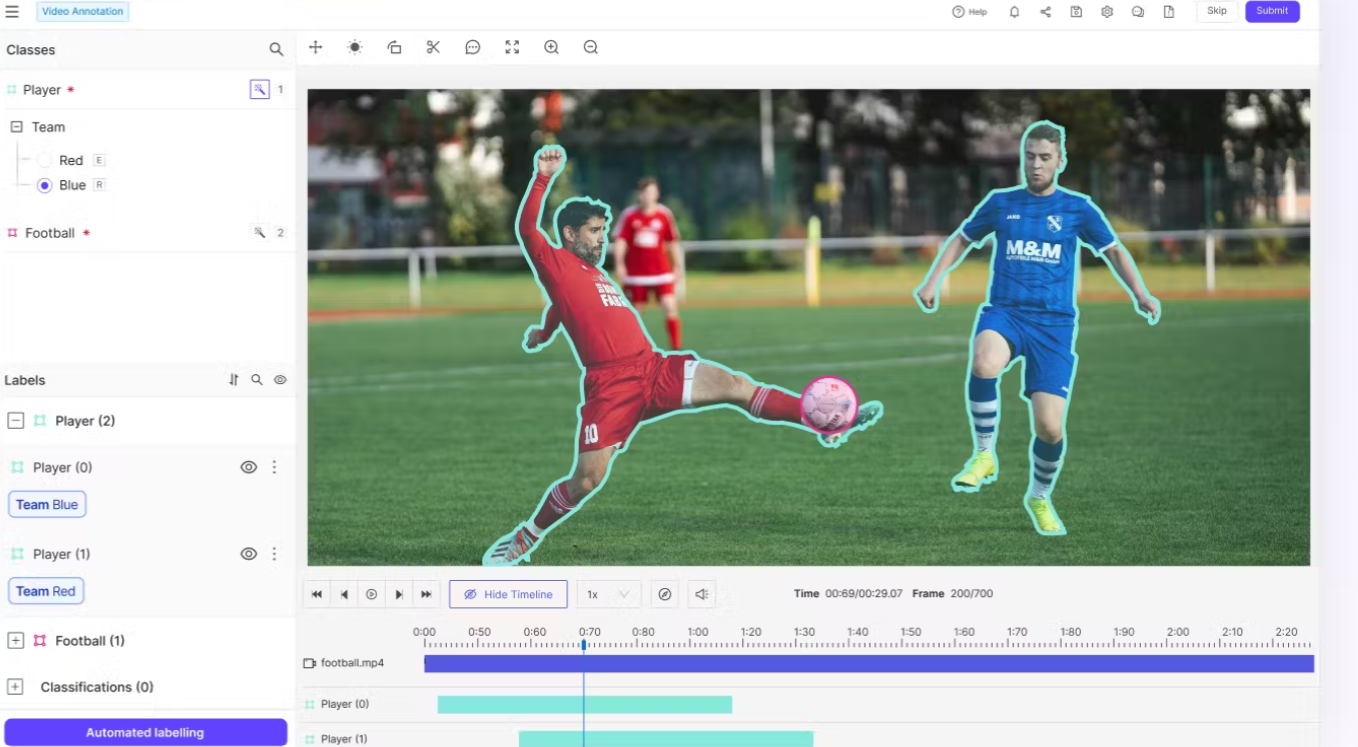

Encord is known for its strengths in 2D annotation, particularly in medical imaging and video segmentation. It supports collaborative workflows, customizable ontologies, and automatic annotation tools, making it suitable for early-stage or research-heavy teams.

Why do teams outgrow Encord?

As teams scale up or transition to real-world multi-sensor pipelines, Encord often falls short in some critical areas:

- No support for LiDAR point cloud annotation.

- No support for sensor fusion annotation.

- No support for single-channel data, such as thermal and depth.

- No support for labeling multiple cameras together.

- Review systems not built for high-throughput 3D workflows.

While Encord performs well in controlled 2D/medical settings, it begins to crack under the complexity of high-scale, multi-sensor tasks, where sensor sync, performance, annotation speed, and automation matter deeply.

Why do users look elsewhere?

This gap has opened the door for next-gen platforms like Mindkosh, which bring:

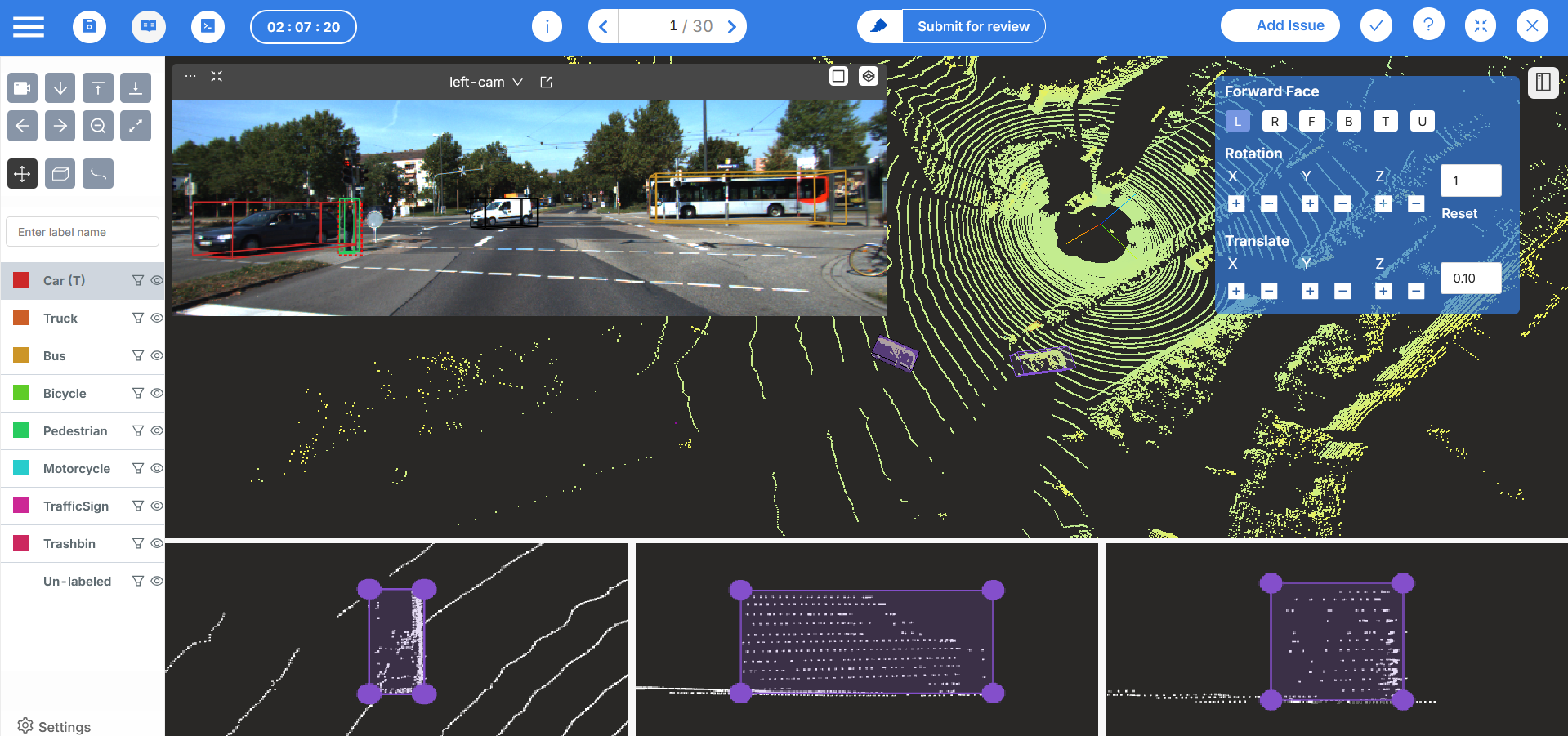

- Powerful LiDAR annotation with automatic projection of annotations over related camera images.

- Rich geometry support, including 3D cuboids, 3D polylines and 3D panoptic segmentation.

- Support for multiple context images when labeling an RGB image.

- Support for single-channel images such as thermal and depth.

- ML-powered annotation tools to automate time-consuming tasks like segmentation.

These solutions are tailored for real-world scale and complexity — not just datasets in a lab.

Mindkosh - Purpose-built for sensor fusion

Best for: Use cases across industries like autonomous vehicles, drones, robotics and industrial automation.

Why teams choose Mindkosh over Encord:

Mindkosh offers native support for LiDAR + RGB image fusion, built-in single-channel image support, comprehensive annotation tools, interpolation and much more. In contrast, Encord offers only basic support for depth data and lacks tools for 3D LiDAR data or robust sensor fusion, which can be deal-breakers for teams working on autonomous driving or robotics.

Automation is another area where Mindkosh leads, offering 1-click auto-labeling for point clouds, interactive segmentation and automatic pre-labeling for images, whereas Encord currently doesn't offer similar capabilities.

On top of this, Mindkosh allows users to set up custom annotation workflows tailored to their projects, while Encord's customization often demands backend integration. Finally, Mindkosh provides transparent, scalable pricing, whereas Encord's total cost of ownership tends to be higher, especially as project complexity grows.

Other notable features include:

- Locking object dimensions across frames.

- Creating LiDAR segmentation objects from cuboids.

- Support for large, dense, RGB point clouds.

- Label templates per class and sensor.

- Multi-annotator setup, honeypot tasks for QA and a mature issue management system.

Explore Multi-sensor labeling with Mindkosh.

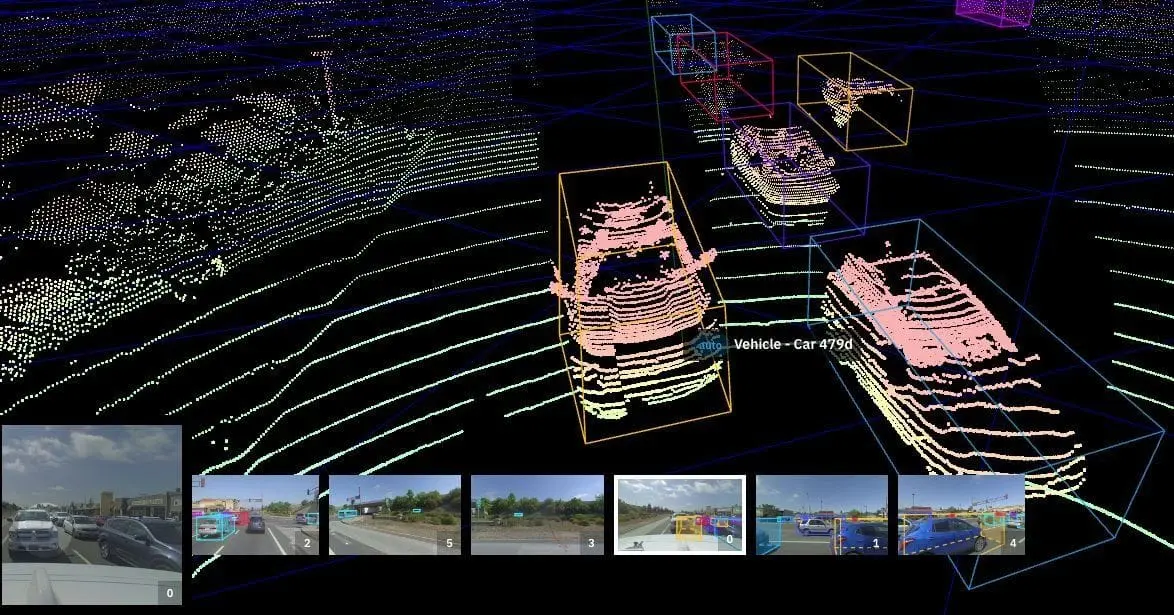

Scale AI – Enterprise-grade AV platform

Scale AI has positioned itself as a go-to platform for managed services, especially for enterprise and government projects. Known for its image, LiDAR and video annotation capabilities, the platform integrates machine learning into its QA workflows, ensuring high annotation accuracy at scale.

However, Scale AI is expensive and geared toward enterprise use, making it less ideal for smaller teams or those looking for a self-serve platform. Most workflows are designed for outsourced labeling, which can limit flexibility. In recent years, Scale has shifted focus toward GenAI and AI agent tooling, creating uncertainty for AV-focused clients. Concerns have also been raised around data privacy, particularly given its heavy involvement in sensitive datasets.

Best for: Non-sensitive datasets and government projects

- Good LiDAR + image annotation support.

- Strong ML-integrated QA.

- Expensive and enterprise-focused.

- Platform better suited for teams outsourcing labeling.

- Uncertainty following the investment from Meta, along with concerns about data privacy.

- Focus has recently shifted to GenAI tools.

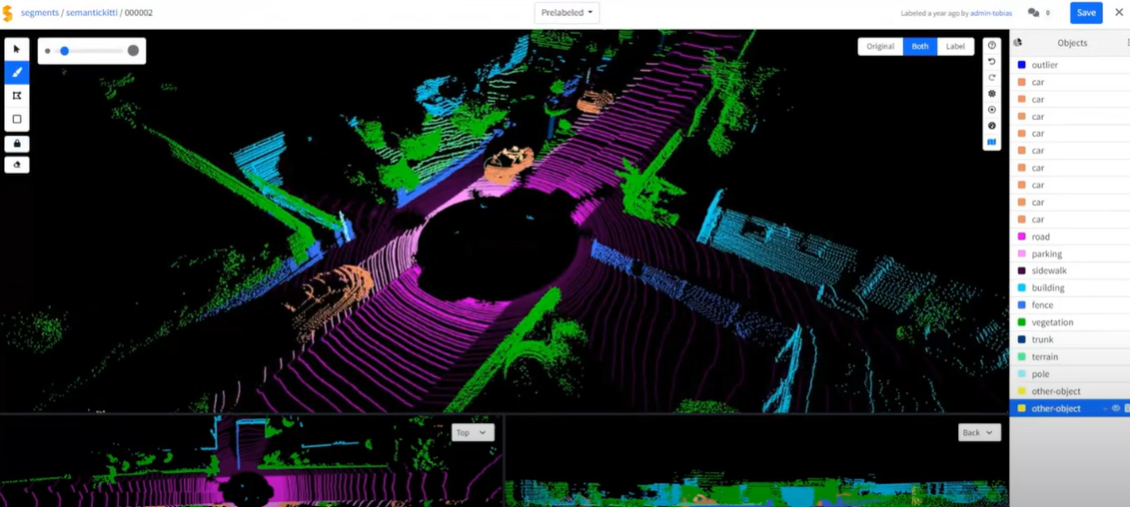

Segments.ai - 3D-first multi-sensor labeling for robotics & AV

Segments.ai is built around LiDAR–camera workflows and sequential 3D datasets. Teams can label multiple sensors at the same time, maintain consistent track IDs across time and modalities, and project cuboids from 3D to 2D in one click. The platform offers 3D-centric tools and interpolation, and a strong Python SDK + webhooks for integrating with active-learning loops. It’s self-serve friendly with clear docs, making it a good fit from PoC to production for robotics and AV teams.

Best for: Internal ML teams in robotics/AV running LiDAR–camera fusion and sequential 3D data.

Where it falls short: Segments.ai is weaker at image labeling, with only a limited set of annotation tools for camera images. While it is good for cuboid labeling in point clouds, its support for point cloud segmentation is limited. It also requires long commitments and does not offer flexible subscription options.

- 3D point-cloud tooling (Batch/Merged modes) for moving & stationary objects

- 1-click 3D→2D projection and fused 2D/3D viewport for context

- Consistent track IDs across modalities and over time

- Automatic interpolation & object tracking.

- Weak at point cloud segmentation and image labeling.

- No support for video annotation.

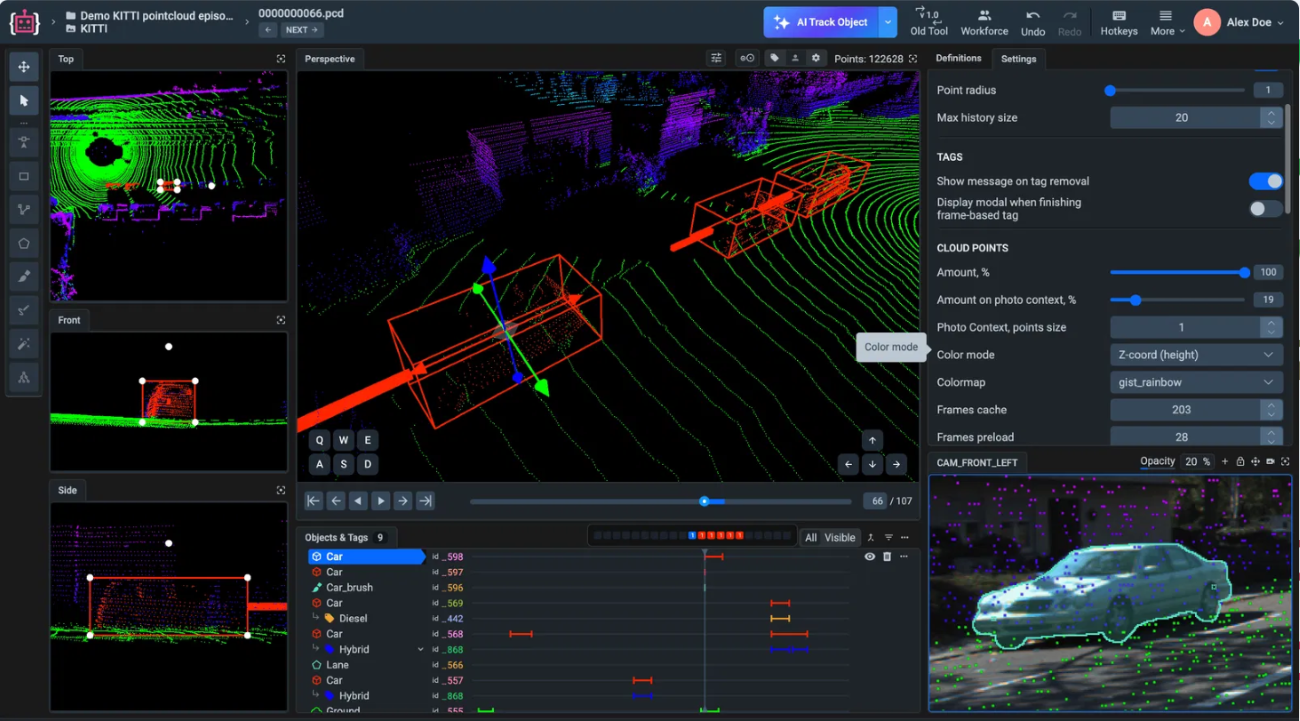

Supervisely – Open and extensible

Supervisely offers a plugin-based annotation and computer vision platform that is especially attractive to research labs and internal labeling teams. Its strength lies in flexibility: teams can build and customize their own tooling layers to fit specific project needs. This extensibility allows for integration with a variety of AI/ML pipelines, making it a favorite for teams with in-house engineering talent.

However, Supervisely's out-of-the-box project management capabilities are limited, which can create friction for production-scale deployments. For teams willing to invest in building around it, the payoff is a highly adaptable and cost-effective solution. The flexibility also comes at a cost: the UI is not easy to navigate, and even simple tasks can be difficult and time-consuming.

Supervisely supports LiDAR labeling with reference images, but it does not support sensor fusion and has a limited set of LiDAR labeling tools. It also struggles with large point clouds and long sequences of LiDAR scans. So while it can be a good option for individual labelers or research labs working with small datasets, it might not be suitable for large-scale production use cases.

Best for: Research labs, internal tools teams

- Extensible, plugin-based architecture.

- Great if you want to build your own tooling layer.

- Weak out-of-the-box project management.

- No support for sensor fusion.

- Weak LiDAR labeling tooling.

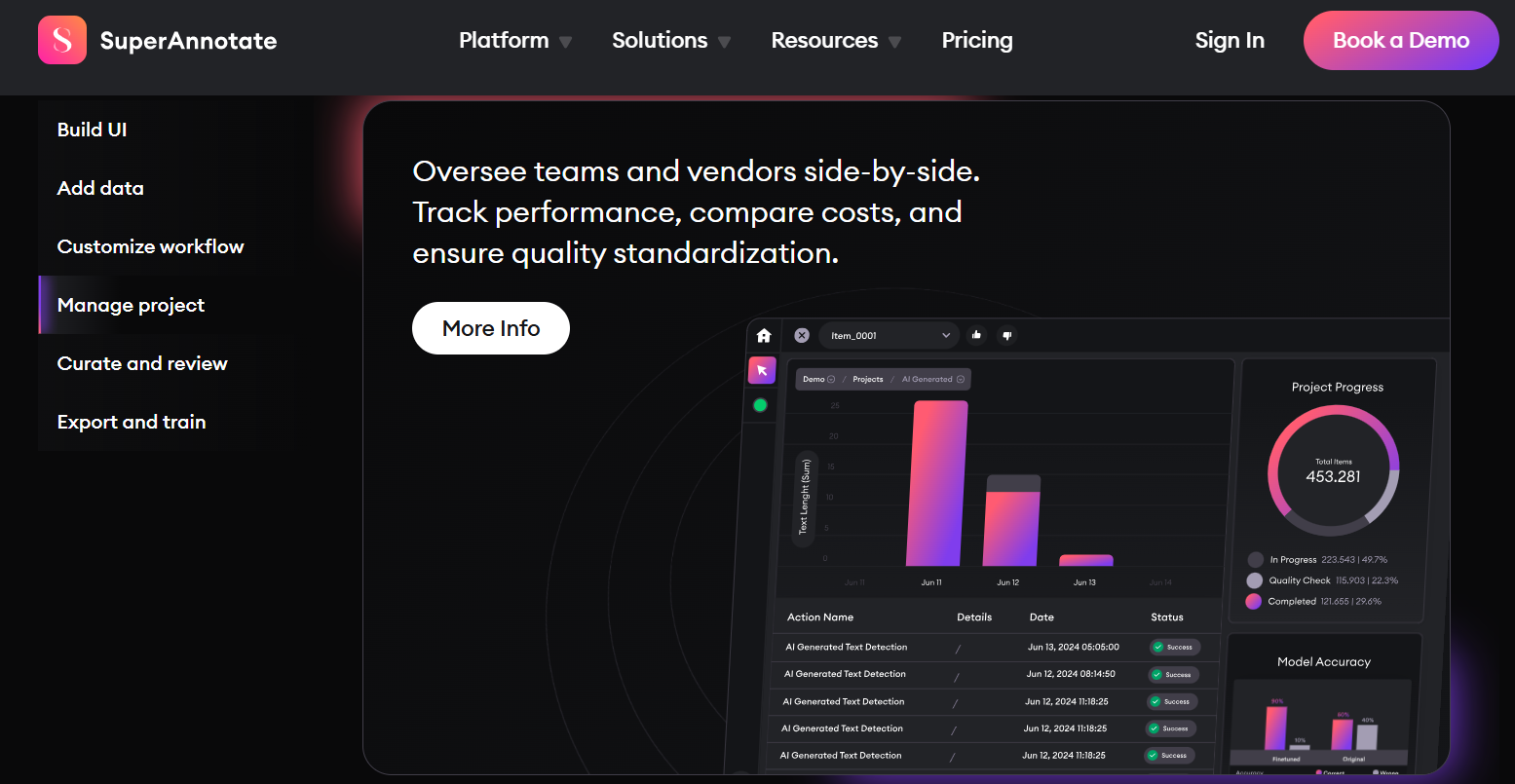

SuperAnnotate - Robust platform with collaboration features

SuperAnnotate markets itself as an AI data platform designed to streamline annotation workflows across industries, with a heavy emphasis on collaboration and speed. The platform supports image and video annotation, with plugins enabling additional capabilities. Its clean UI/UX and task distribution features make it easy for cross-functional teams to work together efficiently, while model-assisted labeling speeds up project turnaround.

SuperAnnotate shines in collaborative QA, workload management, and integration into existing AI stacks. Security and compliance are highlighted as enterprise-grade, making it appealing to teams that handle sensitive data. However, unlike Mindkosh or Scale AI, it lacks support for LiDAR and multi-sensor labeling, making it unsuitable for use cases that involve point clouds or sensor fusion.

Best for: Teams that prioritize speed and cross-functional collaboration

- Good UI/UX and annotation speed.

- Offers model-assisted labeling.

- Robust platform used by many teams.

- Great option for 2D image labeling.

Parting thoughts

Multi-sensor data annotation is too complex to be handled by generic tools. While Encord is a great option for some medical and 2D workflows, it lacks the robustness required for 3D-heavy or real-time sensor fusion use cases.

If you're building the next generation of perception systems — for autonomous driving, robotics, or drone-based analytics — platforms like Mindkosh are purpose-built to handle that complexity. If you would like to see it in action, just let us know - we'd be happy to help!