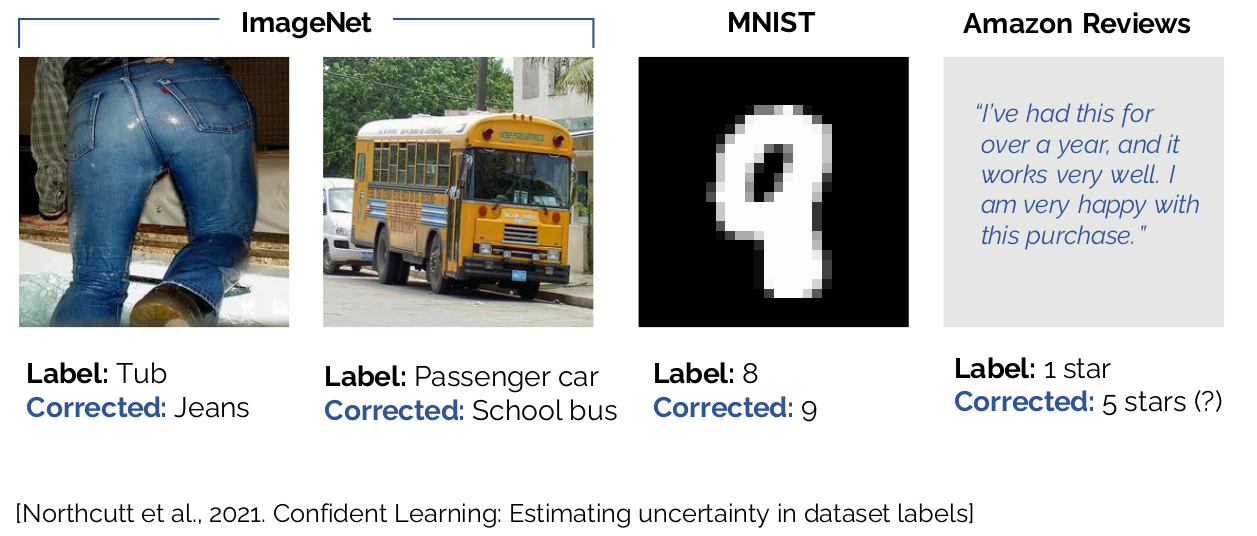

Data annotation is a crucial process that forms the backbone of training data for Machine Learning (ML) and Artificial Intelligence (AI) systems. High-quality labels provide the foundation for models to learn effectively, achieve better performance, and produce reliable results. However, the process of annotating data is prone to challenges that can compromise label quality. It is well documented that even the most popular and widely used public dataset have a high error rate. In this blog, we’ll explore why high-quality labels are important, how to measure label quality using common techniques, and tips for maintaining high-quality data annotation.

Why are high quality labels important?

Data annotation involves labeling raw data (such as images, videos, text, and audio) to train AI and ML models. High-quality labels are essential for the following reasons:

A. Model Accuracy and Performance

- Improved Accuracy: Accurate and precise labels enable models to learn from reliable data, leading to higher accuracy in predictions and classifications.

- Better Generalization: When trained on high-quality data, models can generalize well to new, unseen data, improving their performance across various tasks.

B. Minimizing Bias and Errors

- Reducing Bias: Poor-quality labels can introduce bias into the data, which may lead to biased and unfair model predictions. High-quality annotation helps ensure balanced representation.

- Error Prevention: Incorrect labels can lead to models learning erroneous patterns, resulting in poor performance and incorrect predictions.

C. Compliance and Ethical Concerns

- Regulatory Compliance: Industries such as healthcare and finance require compliance with strict regulations, which necessitates high-quality labels to ensure data accuracy and safety.

- Ethical AI: High-quality labels help maintain ethical AI practices, ensuring the model operates fairly and ethically, particularly in sensitive applications.

How to measure the quality of labeled datasets ?

Being able to accurately measure the quality of labeled datasets is essential to ensuring high quality annotations. Here are some of the most widely used techniques for measuring the quality of labels.

A. Inter-Annotator Agreement (IAA)

- Cohen's Kappa: Measures agreement between annotators beyond chance. Values close to 1 indicate high agreement, while values close to 0 indicate low agreement.

- Fleiss' Kappa: An extension of Cohen's Kappa for measuring agreement among multiple annotators. Useful for tasks involving more than two annotators.

- Krippendorff's Alpha: A robust metric for measuring agreement among annotators, suitable for various types of data and scales.

B. Gold Standard Testing

- Validation with Gold Standard Data: Comparing annotated data against a set of gold standard data that has been verified by domain experts provides an objective measure of label quality.

- Discrepancy Analysis: Identifying and analyzing discrepancies between annotations and gold standard data helps pinpoint areas for improvement.

C. Review and Audit Processes

- Random Sampling: Reviewing a random sample of annotated data can reveal inconsistencies and errors in the labeling process.

- Double Annotation: Assigning the same data to multiple annotators and comparing their labels can highlight areas of disagreement and potential issues.

Tips on maintaining high quality data annotation

Maintaining high-quality data annotation is a multifaceted process that requires careful planning, management, and monitoring. Here are some tips to achieve and sustain high-quality labels:

A. Clear Guidelines and Instructions

- Standardized Annotation Guidelines: Create clear, comprehensive guidelines that outline the expected labeling standards and provide examples for reference.

- Training for Annotators: Train annotators thoroughly on the guidelines and provide continuous feedback to improve their accuracy and consistency.

B. Quality Control and Monitoring

- Continuous Monitoring: Monitor annotators' performance regularly to identify trends, patterns, and potential issues in labeling quality.

- Feedback and Retraining: Provide annotators with feedback and retraining when discrepancies or low-quality annotations are identified.

C. Annotator Selection and Management

- Experienced Annotators: Hire and retain experienced annotators who understand the domain and task-specific requirements.

- Diverse Annotator Pool: Use a diverse pool of annotators to minimize potential biases and ensure a broader perspective in labeling.

D. Iterative Review and Improvement

- Review and Refine: Regularly review annotated data and refine the guidelines and instructions based on feedback and audit results.

- Learn from Mistakes: Identify common errors and address them through additional training or revisions to the annotation process.

E. Technology and Tools

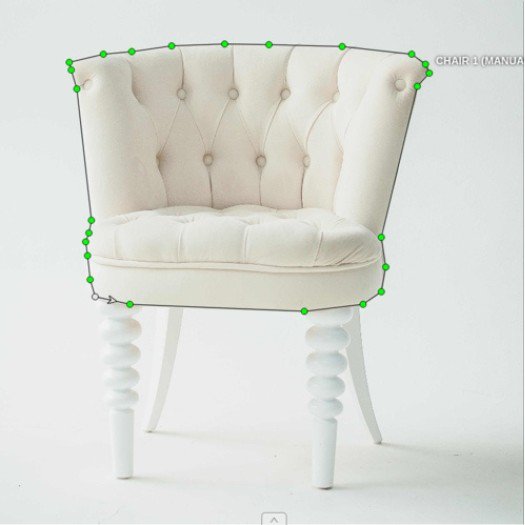

- Annotation Tools: Use advanced annotation tools that provide features such as automated suggestions, easy error correction, and real-time collaboration.

- Automated Quality Checks: Implement automated quality checks within annotation tools to flag potential errors or inconsistencies.

F. Incentivize Quality

- Performance Incentives: Offer incentives or bonuses based on the quality of annotations to motivate annotators to maintain high standards.

- Recognition and Rewards: Recognize and reward annotators who consistently produce high-quality work.

G. Data Privacy and Security

- Ensure Data Privacy: Protecting the privacy and security of data being annotated is crucial, particularly in sensitive domains such as healthcare.

- Compliance with Regulations: Adhere to data protection regulations to maintain trust and integrity in the annotation process.

Conclusion

Maintaining and assuring high-quality data annotation is essential for developing robust, reliable, and accurate AI and ML models. By understanding the importance of high-quality labels, using common techniques to measure label quality, and following best practices for maintaining quality, organizations can enhance the performance and reliability of their AI and ML systems. Continuous improvement and adaptation of the annotation process, along with the use of advanced tools and technologies, will contribute to sustained high-quality data annotation.