"80% of Machine Learning is simply data cleaning" is a commonly used phrase in the AI/ML community. Data quality plays a crucial role in Machine Learning (ML) models’ performance. It can be the difference between the success and failure of an AI/ML project.

While ML models may perform well in the training environment, many of them underperform badly in the real world. To mitigate the problem, datasets need to be carefully curated and labeled so that they cover a variety of scenarios and the labels are consistent and accurate.

But how do we accurately measure the quality of the training data? In fact, how do we even define a proper quality measure in this scenario?

Label consistency

Accuracy measures how close the data labels are to the ground truth, or how well the labeled features in the data match real-world conditions. While it's important that your data is accurate, it's also important that the labels are consistent throughout the dataset. Inconsistent labeling is one of the most common reasons behind underperforming ML models.

Label consistency quantifies how consistently the labels are applied to the training data. For example, if two annotators label the same data point, their labels should be the same.

In order to avoid inconsistency among labels and to make sure all stakeholders are on the same page on the actual requirements of a project, it is important to properly write an "Annotation Guide". This document precisely defines the labels involved in the project and provides instructions on how to deal with confusing scenarios.

Measuring label consistency

Consistency can be measured using a variety of methods, such as Consensus, Cohen's Kappa coefficient and Cronbach's alpha.

Consensus

Consensus measures the percentage of agreement between multiple annotators. The idea is to find, for each item, the label that most labelers agree on. An auditor typically arbitrates any disagreement among the overlapping judgments. Consensus can be performed by assigning a certain number of reviewers per data point. It can also be performed by assigning a portion of the whole dataset to more than one labeler.

To calculate a consensus score, divide the total number of agreeing labels by the total number of labels per data-point.

consensus = labels-in-agreement/total-number-of-labels
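The formula above can be sketched in a few lines of Python. This is a minimal illustration, assuming the labels in agreement are those matching the majority label for a data point; the function name `consensus_score` and the sample labels are hypothetical.

```python
from collections import Counter


def consensus_score(labels):
    """Return (majority_label, consensus) for the labels given to one data point.

    Assumption: "labels in agreement" means labels equal to the majority label.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    # most_common(1) returns the label with the highest vote count.
    majority_label, votes = Counter(labels).most_common(1)[0]
    return majority_label, votes / len(labels)


# Three annotators labeled the same image; two of them agree.
label, score = consensus_score(["cat", "cat", "dog"])
print(label, round(score, 2))
```

When two labels tie for the most votes, this sketch simply returns the one that was seen first, so in practice such data points are better routed to the auditor.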

Cohen's Kappa coefficient

Cohen's kappa coefficient (κ) is another statistic used to measure inter-rater reliability between two annotators. It is generally considered a more robust measure than a simple percent agreement calculation, as it takes into account the possibility of the agreement occurring by chance.

The kappa coefficient is calculated as follows:

κ = (po - pe) / (1 - pe)

Here,

- po is the observed proportion of agreement between the raters.
- pe is the proportion of agreement that would be expected by chance.

The kappa coefficient can range from -1 to 1, with a value of 1 indicating perfect agreement and a value of 0 indicating no agreement beyond chance. A negative value of kappa indicates that the raters are disagreeing more often than would be expected by chance. Here is a more detailed interpretation of the different values of the coefficient.

- κ < 0 - Less agreement than would occur by chance
- 0.00 <= κ <= 0.20 - Slight agreement
- 0.21 <= κ <= 0.40 - Fair agreement
- 0.41 <= κ <= 0.60 - Moderate agreement
- 0.61 <= κ <= 0.80 - Substantial agreement
- 0.81 <= κ <= 1.00 - Almost perfect agreement
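As a rough sketch, kappa for two annotators can be computed directly from the formula above. The function names and the sample labels below are hypothetical. The chance agreement pe is estimated from how often each annotator used each label. Returning 1.0 when both annotators used one identical label throughout is an assumption of this sketch, not a universal convention.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # po: observed proportion of items on which the two raters agree.
    po = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # pe: chance agreement, from each rater's own label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    pe = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if pe == 1.0:
        # Assumption: both raters used a single identical label everywhere.
        return 1.0
    return (po - pe) / (1 - pe)


def interpret_kappa(kappa):
    """Map a kappa value to the agreement bands listed above."""
    if kappa < 0:
        return "Less agreement than would occur by chance"
    for upper, name in [(0.20, "Slight"), (0.40, "Fair"), (0.60, "Moderate"),
                        (0.80, "Substantial")]:
        if kappa <= upper:
            return name + " agreement"
    return "Almost perfect agreement"


rater_a = ["cat", "cat", "cat", "cat", "dog", "dog", "dog", "dog", "dog", "dog"]
rater_b = ["cat", "cat", "cat", "dog", "dog", "dog", "dog", "dog", "dog", "cat"]
kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3), interpret_kappa(kappa))
```

In this example the annotators agree on 8 of 10 items (po = 0.8), but half of that agreement is expected by chance (pe = 0.52), so kappa lands well below the raw agreement rate.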

Cronbach's alpha

Cronbach’s alpha is a measure of internal consistency, that is, how closely related a set of items are as a group. It can be calculated by comparing the variance of the individual items in a scale to the variance of the scale as a whole.

A high Cronbach's alpha value indicates that the labels are consistent with each other, and that the training data is likely to be of high quality. A low Cronbach's alpha value indicates that the labels may be inconsistent, and that the training data may be of lower quality. In detail, it can be interpreted as follows:

- α > 0.9 - Excellent reliability
- 0.8 <= α <= 0.9 - Good reliability
- 0.7 <= α < 0.8 - Acceptable reliability
- α < 0.7 - Poor reliability
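The variance comparison described above corresponds to the standard formula α = k / (k - 1) × (1 - sum of item variances / variance of the totals), where k is the number of items. Below is a minimal sketch, assuming numeric ratings where each row is one data point and each column is one item (for example, one annotator's score). The function name and the sample ratings are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a table of numeric scores.

    scores: list of rows, one row per data point, one column per item.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two data points")
    # Sample variance of each item (column) on its own.
    item_variances = [variance(column) for column in zip(*scores)]
    # Sample variance of the per-row totals (the scale as a whole).
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Five data points rated on a 1-5 scale by three annotators.
ratings = [
    [1, 2, 1],
    [2, 3, 2],
    [3, 3, 3],
    [4, 5, 4],
    [5, 5, 5],
]
alpha = cronbach_alpha(ratings)
print(round(alpha, 3))
```

Because the formula relies on variances, it only applies to numeric or ordinal labels; purely categorical labels are better served by consensus or kappa.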

Label accuracy

Label accuracy is an important measure of training data quality. It measures how close the labels in the training data are to the ground truth. While measuring accuracy when training Machine Learning models is relatively straightforward, doing so for annotated data is tricky because ground truth data usually does not exist yet.

However, there are a few different accuracy measures that can be used to keep track of the quality of data. The following work well for a variety of use cases.

Accuracy Measures for training data

Honey Pot

If you have some portion of the data already labeled, and you believe it conforms to your data quality standards, a honey pot can be a good way to measure the average quality of the dataset.

To set up a honey pot, include the already labeled data with the data you send for annotation, without alerting the labelers about which data points are already labeled. You then match the new labels with the ones you already have to arrive at an approximate measure of the accuracy of the data. Of all the measures, this is the easiest to set up, and can be done completely on your end rather than the labelers' end.
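The matching step can be sketched as follows. This is a minimal illustration, assuming each label is a single value that can be compared for equality and that items are keyed by an ID. The function name, item IDs and labels are hypothetical.

```python
def honeypot_accuracy(trusted_labels, new_labels):
    """Share of honey pot items whose new label matches the trusted label.

    trusted_labels: dict of item ID -> label you already trust.
    new_labels: dict of item ID -> label returned by the labelers.
    Items outside the honey pot are ignored.
    """
    scored = [item_id for item_id in trusted_labels if item_id in new_labels]
    if not scored:
        raise ValueError("no honey pot items found in the new labels")
    matches = sum(trusted_labels[i] == new_labels[i] for i in scored)
    return matches / len(scored)


trusted = {"img_1": "cat", "img_2": "dog", "img_3": "cat", "img_4": "dog"}
returned = {"img_1": "cat", "img_2": "dog", "img_3": "dog", "img_4": "dog",
            "img_5": "cat"}  # img_5 is not part of the honey pot
accuracy = honeypot_accuracy(trusted, returned)
print(accuracy)
```

For richer annotations such as bounding boxes or polygons, the equality check would need to be replaced by a task-specific comparison, which is outside the scope of this sketch.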

Spot Review

This method is based on a review of label accuracy by a domain expert. The review is usually done by randomly checking a small portion of the total data for mislabels. Two simple methods can then be used to measure the quality of the training data.

- Asset level accuracy measure - Mark an asset (image, audio file, etc.) as inaccurate if it contains any inaccurate label. Then you can simply divide the total number of accurate assets by the total number of reviewed assets to get a coarse measure of accuracy.

- Label level accuracy measure - A more fine-grained measure of accuracy. You can calculate this measure by dividing the total number of accurate labels by the total number of labels in the reviewed data.
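Both measures can be computed from the same review results. Here is a minimal sketch, assuming the reviewer records one verdict per label (True for accurate, False for inaccurate), grouped by asset. The function name and the sample verdicts are hypothetical.

```python
def spot_review_accuracy(reviewed_assets):
    """Return (asset_level_accuracy, label_level_accuracy).

    reviewed_assets: list of assets, each a list of booleans with one
    verdict per label in that asset (True = accurate).
    """
    total_assets = len(reviewed_assets)
    total_labels = sum(len(verdicts) for verdicts in reviewed_assets)
    if total_assets == 0 or total_labels == 0:
        raise ValueError("nothing was reviewed")
    # An asset counts as accurate only if every label in it is accurate.
    accurate_assets = sum(all(verdicts) for verdicts in reviewed_assets)
    # True counts as 1, so summing the verdicts counts accurate labels.
    accurate_labels = sum(sum(verdicts) for verdicts in reviewed_assets)
    return accurate_assets / total_assets, accurate_labels / total_labels


review = [
    [True, True, True],
    [True, False],
    [True, True, True, True, False],
    [True],
]
asset_accuracy, label_accuracy = spot_review_accuracy(review)
print(asset_accuracy, round(label_accuracy, 3))
```

The example shows why the asset level measure is coarser: a single wrong label among five marks the whole asset as inaccurate, so the asset level score is noticeably lower than the label level score.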

Qualitative measure of training data quality

The quantitative measures defined above describe how accurate and consistent your labels are across the training dataset. However, to make sure that the data is sufficient to develop an impactful Machine Learning model, there are a few other considerations that should be kept in mind.

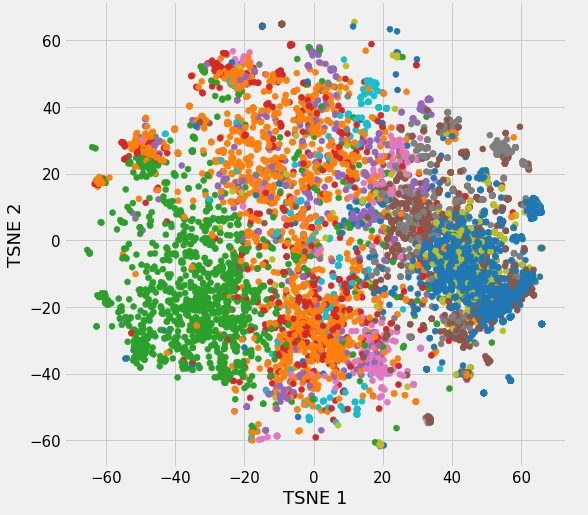

Completeness

Completeness indicates how complete the training data is. A complete training data set should contain all of the possible scenarios the ML model will likely encounter in production. Completeness can be roughly measured by examining the distribution of the data and identifying any missing or underrepresented groups.
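A rough check of this kind can be sketched by counting labels per class. The example assumes you can list the classes you expect in production, and the 5% threshold for "underrepresented" is an arbitrary assumption for illustration. The function name, class names and counts are hypothetical.

```python
from collections import Counter


def find_coverage_gaps(labels, expected_classes, min_share=0.05):
    """Return (missing, underrepresented) classes in a labeled dataset.

    min_share is an assumed cutoff: classes that are present but make up
    less than this share of the labels are reported as underrepresented.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    missing = [c for c in expected_classes if counts[c] == 0]
    underrepresented = [
        c for c in expected_classes if 0 < counts[c] / total < min_share
    ]
    return missing, underrepresented


labels = ["car"] * 90 + ["truck"] * 8 + ["bicycle"] * 2
expected = ["car", "truck", "bicycle", "pedestrian"]
missing, underrepresented = find_coverage_gaps(labels, expected)
print(missing, underrepresented)
```

Class counts only capture one dimension of completeness. Conditions such as lighting, weather or recording device would need their own metadata to be checked in the same way.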

Diversity

Diversity quantifies how diverse the training data is. A diverse training data set should contain a variety of different examples of the problem that the ML model is trying to solve.

Quality Assurance

To keep track of the quality of your data in a systematic way, it is important that proper Quality Assurance processes are set in place with your Data Annotation vendor or internal team. You should always ask how quality is measured and what happens if the measured quality does not meet an agreed-upon threshold.

To ensure consistency, here at Mindkosh, we always make sure that the annotation guide is properly detailed by going through a mock labeling session on a sample of data. The idea is to catch gaps and ambiguities in the guide early in the project's lifecycle, which can save a lot of time later on. We also make sure there are open lines of communication between all stakeholders, so that any doubts or problems can be resolved immediately.

In addition, to ensure high label accuracy, a typical workflow involves each data point going through at least two human annotators: first for labeling, and then for review. Subsequently, a small portion (usually 5%) of the data is subjected to a quality check by a domain expert, who establishes the quality of the batch of data.

Interested in getting your data labeled with the highest quality? Get in touch with us today!

Or check out this free cost estimator tool to estimate your data labeling costs.