Self-driving cars are no longer science fiction — they're here, quietly making deliveries, mapping city blocks, and navigating test tracks. But the real magic behind autonomous vehicles (AVs) isn’t in the sleek design or even the advanced sensors. It's in the data — specifically, how that data is labeled.

Every time an AV recognizes a traffic light, yields to a pedestrian, or merges into traffic, it does so because it has learned how to interpret its environment. And that learning happens through data — mountains of it — that have been meticulously labeled to tell the machine what's what.

Imagine teaching a child how to cross a street. You’d point out cars, bicycles, stop signs, and tell them what to do when a light turns red. Autonomous vehicles undergo a similar process, but instead of words, they learn from labeled images, videos, and 3D point clouds.

Here’s why this matters: a mislabeled object can mean the difference between a smooth ride and a serious accident. For instance, if a system fails to distinguish between a stroller and a plastic bag in the street, the consequences could be catastrophic. That’s how high the stakes are.

In the world of AVs, data labeling isn't a behind-the-scenes task — it's the foundation of safety, performance, and trust.

Key challenges when labeling data for autonomous driving

While the goal is clear — annotate the world so machines can understand it — the path is riddled with complexity. From ambiguous objects to unexpected edge cases, labeling AV data is as challenging as it is crucial.

Ambiguous scenarios & human oversight

The real world is messy. It doesn't always present objects in neat, clear-cut ways.

Think about street signs attached to poles. Should the annotator label the sign, the pole, or both? What if there are two signs on one pole — one temporary, one permanent? Inconsistent answers can confuse the model during training.

Now imagine this: An autonomous vehicle is driving through a neighborhood construction zone. A traffic cone is mislabeled as a pedestrian. The car, prioritizing safety, suddenly slams on the brakes. This could cause a rear-end collision or panic in traffic.

Such scenarios show how critical human oversight is, especially when AI can’t confidently resolve uncertainty.

Ambiguity in data requires more than technical precision; it demands contextual judgment, which humans are still best equipped to provide — at least for now.

On Mindkosh, a powerful issue-tracking dashboard helps annotators flag such edge cases, so reviewers can intervene before these ambiguities affect training.
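One simple way to surface ambiguous objects for human review, independent of any particular platform, is to compare the labels several annotators gave the same object and flag the ones where they disagree. The sketch below is only an illustration: the function name, the data layout, the object IDs, and the 0.75 agreement threshold are all assumptions, not part of any specific tool.

```python
from collections import Counter

def flag_ambiguous(labels_by_object, min_agreement=0.75):
    """Return IDs of objects whose most common label has less than
    min_agreement support among annotators.

    labels_by_object maps an object ID to the list of class labels that
    different annotators assigned to it (hypothetical data layout).
    """
    flagged = []
    for object_id, labels in labels_by_object.items():
        if not labels:
            continue  # nothing to compare for unlabeled objects
        # Share of annotators who chose the most common label.
        top_count = Counter(labels).most_common(1)[0][1]
        if top_count / len(labels) < min_agreement:
            flagged.append(object_id)
    return flagged

# Hypothetical labels from four annotators per object.
labels = {
    "obj_1": ["traffic_cone", "traffic_cone", "traffic_cone", "traffic_cone"],
    "obj_2": ["traffic_cone", "pedestrian", "traffic_cone", "pedestrian"],
    "obj_3": ["sign", "sign", "pole", "sign"],
}
flagged = flag_ambiguous(labels)
print(flagged)
```

Here only the object where annotators split evenly between "traffic_cone" and "pedestrian" is flagged; a reviewer would then decide the final label and, ideally, update the labeling guidelines.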

Edge case complexity

Autonomous systems need to be ready for the 1-in-a-million event — because on the road, even rare events are life-and-death matters.

Edge cases include:

- Vehicles or pedestrians depicted on other vehicles (for example, in printed advertisements)

- Trucks carrying cars or motorbikes

- Snow covering road markings

- A fallen tree blocking part of a lane

- A dog crossing the road during heavy rain

- Children playing near parked cars

These situations are not only rare, but also incredibly varied in appearance. They challenge even the most advanced perception systems.

Unfortunately, such events are often underrepresented in datasets. And even when they appear, annotators might not be sure how to label them properly.

That’s why specialized annotation protocols and expert review mechanisms are vital. Without them, models trained on ideal conditions will falter in the real world.
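A quick class-frequency check can show which categories are underrepresented before training starts. The following is a minimal sketch, assuming annotations are stored as dictionaries with a "label" key; the function name, the 1% threshold, and the toy class names are hypothetical.

```python
from collections import Counter

def underrepresented_classes(annotations, min_share=0.01):
    """Return {label: share} for classes that make up less than
    min_share of all annotations."""
    counts = Counter(a["label"] for a in annotations)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }

# Toy dataset of 1,000 annotations dominated by common classes.
annotations = (
    [{"label": "car"}] * 950
    + [{"label": "pedestrian"}] * 45
    + [{"label": "fallen_tree"}] * 3
    + [{"label": "animal"}] * 2
)
rare = underrepresented_classes(annotations)
print(sorted(rare))
```

Classes that fall below the threshold are candidates for targeted data collection or for routing to expert reviewers.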

Annotation techniques used in AV development

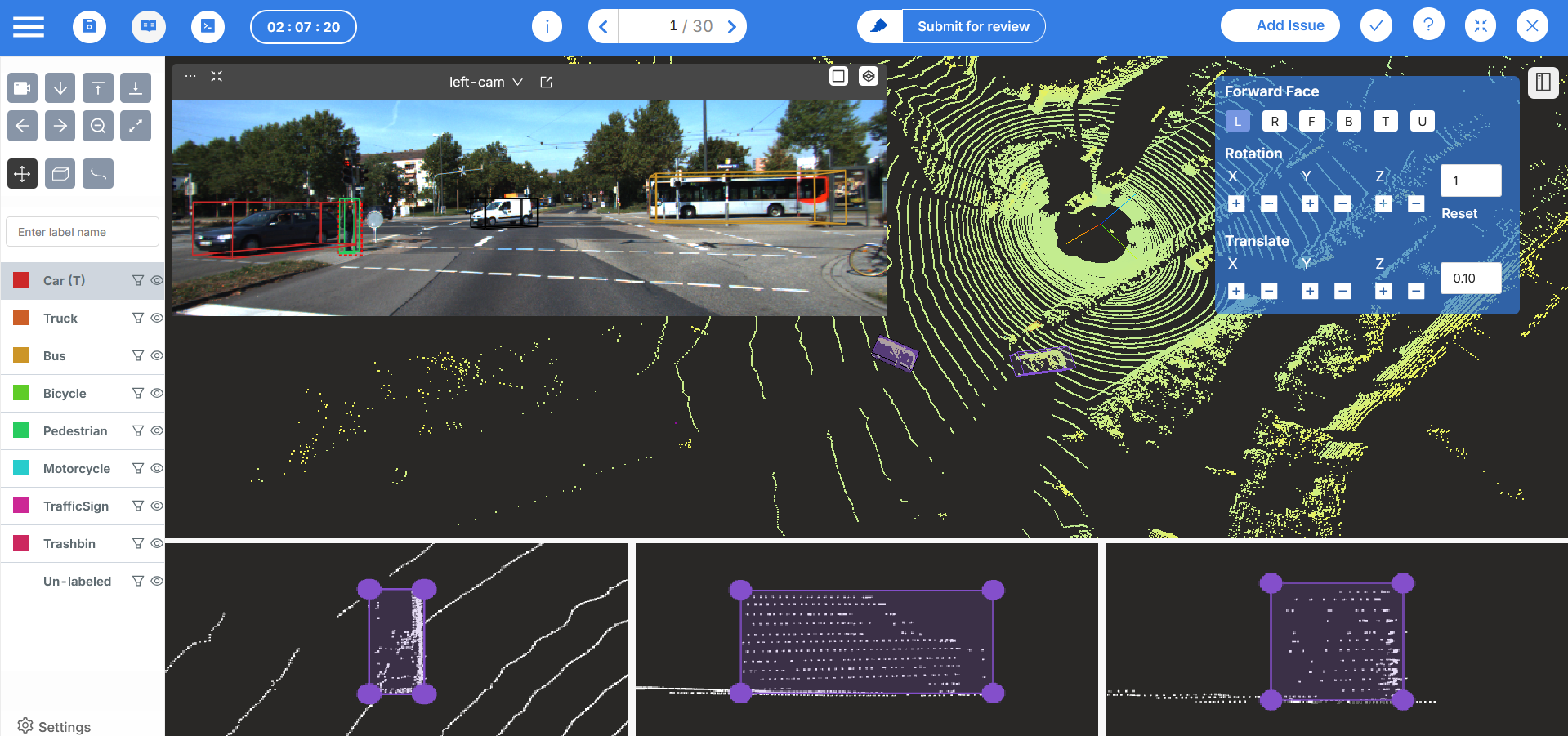

AVs rely on multiple sensors — cameras, radar, LiDAR, GPS — producing a variety of data formats. To teach AVs to interpret this data, different annotation techniques are used, each serving a specific purpose.

Bounding box annotation

This is the most basic technique, and one of the most widely used. Annotators draw rectangles around objects such as:

- Vehicles

- Pedestrians

- Bicycles

- Traffic signs

Bounding boxes are relatively quick to draw and effective for object detection models. When boxes are combined with tracking, the same object can be followed across frames in a video sequence, which improves temporal understanding and reduces redundant labeling effort.

Interpolation algorithms can further enhance efficiency by filling in boxes between keyframes — saving time and improving consistency.
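To make the keyframe idea concrete, here is a minimal sketch of linear interpolation between two manually drawn boxes. It assumes boxes are stored as (x_min, y_min, x_max, y_max) tuples and that the object moves roughly linearly between keyframes; real tools may use more sophisticated motion models, and the function name is hypothetical.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate (x_min, y_min, x_max, y_max) boxes between
    two keyframes.

    Returns a dict mapping every frame index from start_frame to
    end_frame (inclusive) to an interpolated box.
    """
    if end_frame <= start_frame:
        raise ValueError("end_frame must be greater than start_frame")
    span = end_frame - start_frame
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at start, 1.0 at end
        boxes[frame] = tuple(
            (1 - t) * s + t * e for s, e in zip(start_box, end_box)
        )
    return boxes

# The annotator draws boxes only on frames 0 and 4;
# frames 1 to 3 are filled in automatically.
boxes = interpolate_boxes(0, (100, 50, 200, 150), 4, (140, 50, 240, 150))
print(boxes[2])  # (120.0, 50.0, 220.0, 150.0)
```

In practice, an annotator would still review the interpolated frames and add a new keyframe wherever the object's motion deviates from a straight line.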

Semantic and instance segmentation

Bounding boxes are helpful, but they have limitations. They don't capture the precise shape or boundaries of an object. That’s where segmentation comes in.

- Semantic Segmentation labels each pixel with a class (e.g., road, vehicle, sidewalk). This provides a more granular understanding of the environment.

- Instance Segmentation takes it a step further by distinguishing between individual instances of the same object class (e.g., two different pedestrians).

This is critical in complex urban environments where objects overlap or occlude each other. For AVs, knowing the exact shape of a pedestrian — and where they are in relation to a vehicle — can influence braking decisions, trajectory planning, and collision avoidance.
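The difference between the two label types is easiest to see on a tiny example. The sketch below uses plain nested lists as masks; the class IDs and the 3x5 grid are made up for illustration, and real masks would be full-resolution images.

```python
# Hypothetical class IDs for the semantic mask.
ROAD, PEDESTRIAN = 0, 1

# Semantic mask: one class ID per pixel.
# Two overlapping pedestrians share the same class ID.
semantic_mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 0],
]

# Instance mask: one instance ID per pixel (0 means "no instance").
# The same pedestrian pixels are now split into instances 1 and 2.
instance_mask = [
    [0, 1, 1, 0, 0],
    [0, 1, 2, 2, 0],
    [0, 0, 2, 2, 0],
]

def pixel_counts(mask):
    """Count how many pixels carry each ID in a 2D mask."""
    counts = {}
    for row in mask:
        for value in row:
            counts[value] = counts.get(value, 0) + 1
    return counts

semantic_counts = pixel_counts(semantic_mask)
instance_counts = pixel_counts(instance_mask)
num_instances = len(set(instance_counts) - {0})

print(semantic_counts[PEDESTRIAN])  # total pedestrian pixels
print(num_instances)                # number of separate pedestrians
```

The semantic mask can only say how many pixels belong to pedestrians, while the instance mask also says that those pixels belong to two separate people.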

Polyline annotation

Polylines are used to annotate continuous objects, such as:

- Lane lines

- Road edges

- Divider markings

- Sidewalk boundaries

AVs use these polylines to understand:

- Where they can legally drive

- How to stay in the correct lane

- Where turns are allowed

Without accurately labeled lane markings, even the best-trained model might drift or fail to change lanes appropriately.
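As an illustration of how a labeled polyline might be consumed downstream, the sketch below computes the shortest distance from a vehicle position to a lane line. It assumes a flat 2D coordinate frame in meters and a polyline stored as a list of (x, y) vertices; the function name and the sample coordinates are hypothetical.

```python
import math

def distance_to_polyline(point, polyline):
    """Shortest distance from a 2D point to a polyline given as a list
    of (x, y) vertices."""
    if len(polyline) < 2:
        raise ValueError("a polyline needs at least two vertices")
    px, py = point
    best = math.inf
    # Check each consecutive pair of vertices as a line segment.
    for (x1, y1), (x2, y2) in zip(polyline, polyline[1:]):
        dx, dy = x2 - x1, y2 - y1
        seg_len_sq = dx * dx + dy * dy
        if seg_len_sq == 0:
            t = 0.0  # degenerate segment: both vertices coincide
        else:
            # Project the point onto the segment and clamp to its ends.
            t = ((px - x1) * dx + (py - y1) * dy) / seg_len_sq
            t = max(0.0, min(1.0, t))
        cx, cy = x1 + t * dx, y1 + t * dy
        best = min(best, math.hypot(px - cx, py - cy))
    return best

lane_line = [(0.0, 0.0), (10.0, 0.0), (20.0, 5.0)]
offset = distance_to_polyline((5.0, 1.5), lane_line)
print(offset)
```

If annotated vertices are off by even a small amount, this kind of offset shifts by the same amount, which is why polyline precision matters for lane keeping.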

Annotation tools & automation: What enables scale?

If AVs are the cars of the future, then annotation tools are the engines that power them.

Labeling a few hundred images manually is manageable. But training a production-ready AV model requires millions of labeled data points, and not just images but multi-sensor datasets: 3D point clouds, thermal images, depth images, and more.

This requires annotation at scale — and with it, automation and smart tooling.

Here is what to look for when choosing an annotation tool to label data for AV and robotics use cases.

- Ability to handle data from multiple sensors, such as LiDAR, images, and single-channel images.

- Workflow customizability, so you can set up custom workflows to ensure quality annotations.

- Automatic annotation tools, especially for image segmentation and point cloud annotation.

- Team management capabilities.

- Powerful analytics to quickly understand bottlenecks.

- Ability to handle a large number of users and a large volume of data.

Investing in the right annotation platform is incredibly important: it is impossible to label large volumes of data with high quality without the right tool. For AV use cases in particular, where annotation quality matters a great deal and sensor stacks can be complicated, annotation tools need to be chosen carefully.

Mindkosh covers all the basic tools (and many more) you need for annotating AV datasets. It supports multiple sensors, including LiDAR, radar, depth images, and thermal images, and has automatic annotation tools that can help you quickly label your datasets. Here is a case study on how Mindkosh helped a startup in the AV space massively reduce QC time and increase overall dataset quality. You can learn more about everything the Mindkosh annotation platform offers here.

Use cases in autonomous driving

Let’s take a look at where all this labeled data ends up, and how it powers the future of transportation.

Training robust machine learning models

At the heart of every AV system is a machine learning model. But no model is better than the data it’s trained on.

For the model to generalize well, the dataset must be:

- Diverse: Covering cities, suburbs, highways, and rural areas — in all weather and lighting conditions.

- Accurate: Mislabels lead to misinterpretation.

- Consistent: Uniform rules for edge cases, class definitions, and object boundaries.

High-quality annotations help these models:

- Detect and track objects in motion

- Understand depth and distance

- Predict trajectories

- Plan safe routes in real time

Read case study: Reducing QC time for large multi-modal datasets for autonomous vehicles

Establishing ground truth

“Ground truth” is the benchmark against which predictions are compared. It’s used to:

- Evaluate model accuracy

- Detect drift and performance drops

- Drive continuous improvement

In simulation environments, ground truth data allows developers to test vehicle logic without physical testing. It’s the gold standard — and it all starts with accurate labeling.
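For bounding boxes, a common way to compare a prediction against ground truth is intersection over union (IoU). The sketch below assumes axis-aligned boxes in (x_min, y_min, x_max, y_max) format; the sample coordinates are made up, and the threshold for counting a detection as correct (0.5 is a frequent choice) depends on the benchmark.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as
    (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height are clamped to zero when the boxes do not overlap.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

ground_truth = (0, 0, 10, 10)  # box drawn by an annotator
prediction = (5, 0, 15, 10)    # box produced by a model
score = iou(ground_truth, prediction)
print(round(score, 3))  # 0.333
```

Because the score is computed against the annotated box, any error in the ground truth directly distorts the evaluation, even when the model itself is right.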

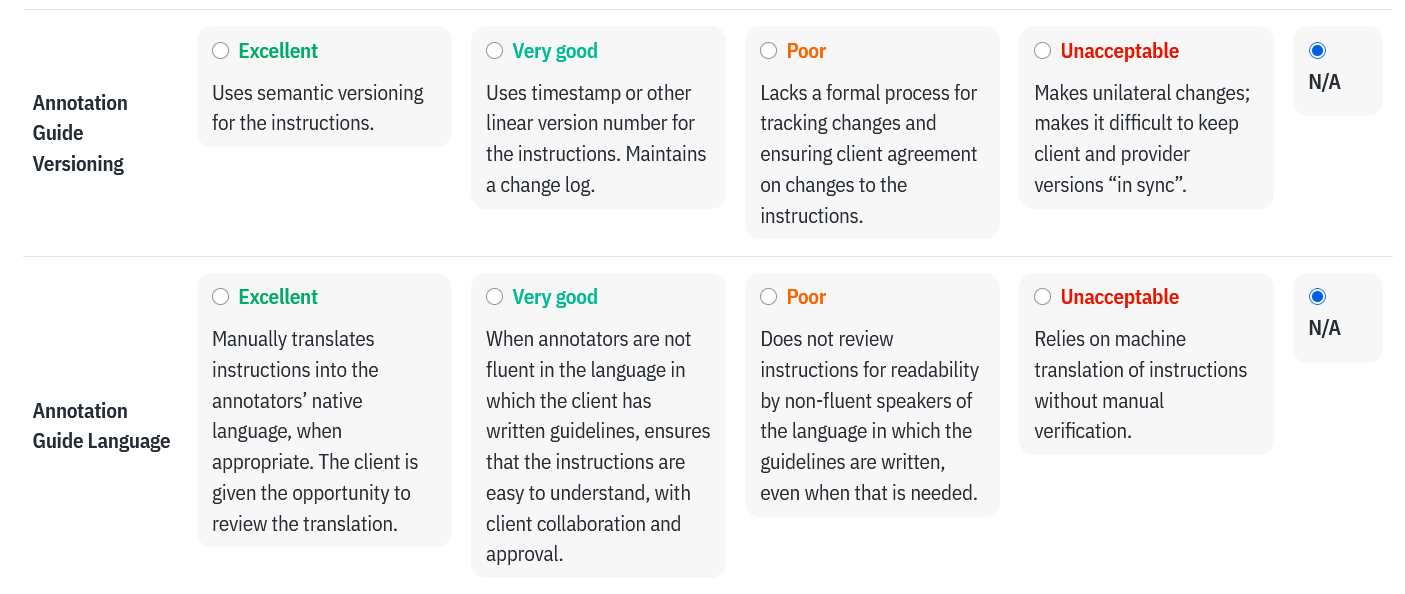

Outsourcing at scale

Building an in-house annotation team is resource-intensive. That’s why many companies outsource annotation to trusted partners.

The right vendor brings:

- Trained annotators familiar with AV standards

- Scalable infrastructure

- Proven QA processes

By outsourcing, AV developers can focus on model development while still ensuring their training data is best-in-class. But choosing the right vendor is not easy. There are plenty of choices, but not everyone can provide the scale and quality needed for your application. In addition, different annotation teams have different strengths: some might be good at image segmentation, while others might not have the experience required to accurately label multi-sensor data. To help you make the decision objectively, we have built a vendor evaluation worksheet, which you can access here.

If you are looking to outsource annotation for your multi-sensor datasets, we offer complete annotation solutions that combine our industry-leading annotation platform with a highly skilled and experienced workforce. You can learn about our solutions here.

Conclusion

In the race to deploy fully autonomous vehicles, it’s easy to focus on breakthroughs in AI or hardware. But the unsung hero of the AV revolution is data labeling.

- Without labeled data, there's no perception.

- Without perception, there's no autonomy.

- Without autonomy, there's no self-driving future.

Data labeling is what teaches AVs to "see" the world — to understand that a stop sign means stop, that a child on a bicycle is not just an object but a fragile life, and that a lane line isn’t just a stripe, but a boundary that can’t be crossed casually.

It’s painstaking work, but it’s foundational. And with the right tools, automation, and human oversight, it can scale — safely and reliably — to help AVs navigate not just roads, but complexity, chaos, and uncertainty.

So the next time you see a driverless car cruising down the street, remember: behind its cool facade and cutting-edge tech lie millions of human decisions (every box drawn, every line traced, and every pixel tagged) that make that journey possible.