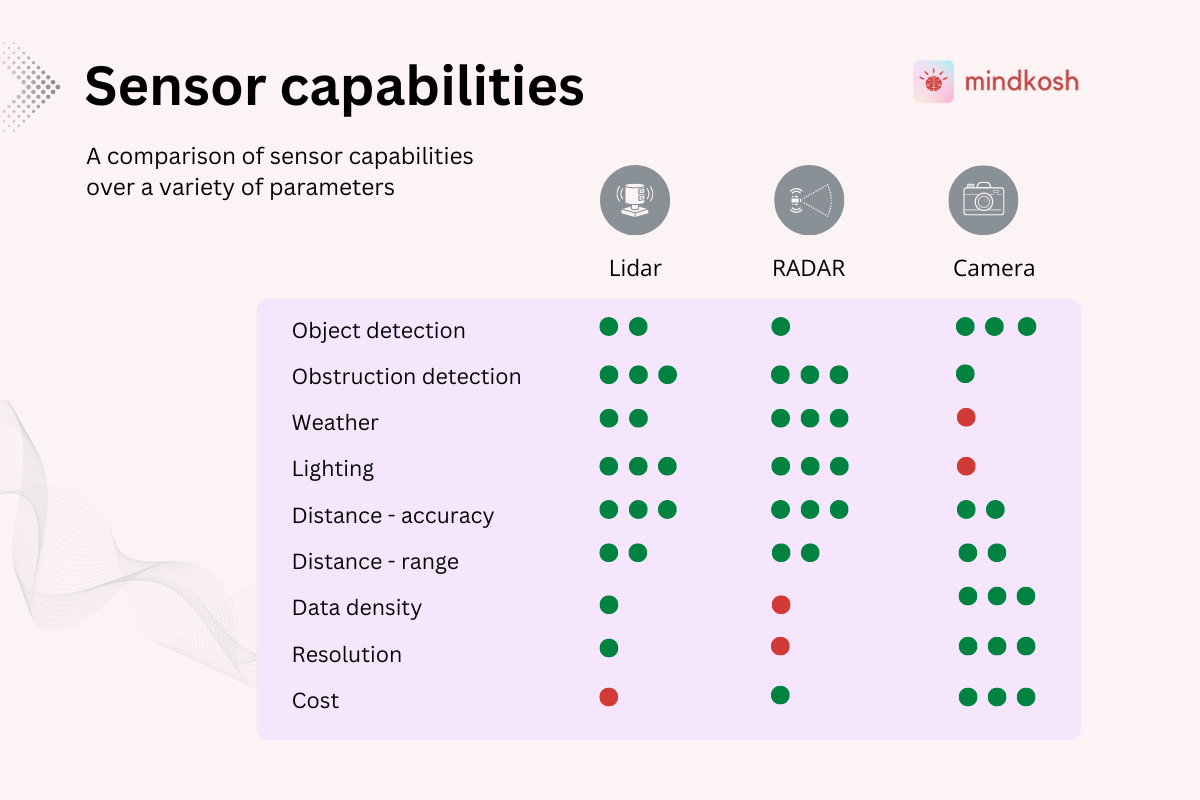

Individual sensors such as Lidars and cameras offer powerful capabilities, but each has limitations when used on its own. In addition, since Lidars are often deployed in safety-critical applications like self-driving cars and robotics, redundancy is a key requirement. This is where sensor fusion comes in.

Sensor fusion allows systems to combine data from multiple sensors with complementary capabilities, providing a more robust view of the environment. In the sections below, we dive deeper into what sensor fusion is, why it is helpful, how it is done and where it is used.

What is Lidar?

Lidar, or Light Detection and Ranging, is a remote sensing technology that measures distances by illuminating a target with laser light and analyzing the reflected light. It generates precise, three-dimensional information about the shape and surface characteristics of objects. Crucially, it provides accurate depth information in the form of point clouds, where each point represents a distance measurement from the sensor to an object in the environment. Lidars come in a variety of form factors and operating principles, each with its own advantages and challenges. You can learn more about how Lidars work here.
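The ranging principle can be sketched in a few lines. This is a minimal sketch assuming a pulsed time-of-flight Lidar (not every Lidar works this way; some use other principles such as FMCW) and an assumed axis convention of x forward, y left, z up; the function names are hypothetical. It turns a pulse's round-trip time into a range, then turns that range plus the beam's angles into a single point of the point cloud.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_range(round_trip_time_s: float) -> float:
    """Distance to the target from the round-trip time of a laser pulse.

    The pulse travels to the target and back, so the one-way distance
    is half of the total path length.
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def to_cartesian(r: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert one range measurement and its beam angles into an (x, y, z) point.

    Assumed convention (it varies by vendor): x forward, y left, z up,
    azimuth measured from x toward y, elevation measured up from the x-y plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        r * math.cos(el) * math.cos(az),
        r * math.cos(el) * math.sin(az),
        r * math.sin(el),
    )
```

For example, a return that arrives 200 ns after the pulse was emitted corresponds to a range of roughly 30 m; repeating this for every beam and firing angle produces the point cloud.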

Advantages of Lidar over cameras

Lidars and cameras each have their own strengths. Cameras provide a rich, colored representation of the world that is easy to process, but Lidars offer several advantages over cameras. Note, however, that Lidars and cameras are not mutually exclusive: they are meant to be used together, covering each other's deficiencies to provide a robust and redundant perception system.

Depth Perception

The biggest advantage of Lidar is accurate and reliable distance measurement to objects, which makes it possible to build detailed 3D maps of the environment. Cameras, on the other hand, require complex algorithms to estimate depth, and these estimates can be less accurate. Even depth cameras typically do not measure depth as accurately as a Lidar sensor.
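To illustrate why camera-based depth can be less accurate, consider stereo cameras, one common way of estimating depth from images. For a rectified stereo pair, depth is Z = f * B / d (focal length f in pixels, baseline B, disparity d), and a small disparity error dd produces a depth error of roughly Z² / (f * B) * dd, which grows with the square of the distance. The sketch below uses hypothetical values: a 1000 px focal length, a 12 cm baseline and a half-pixel disparity error.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def stereo_depth_error(focal_px: float, baseline_m: float, depth_m: float,
                       disparity_error_px: float = 0.5) -> float:
    """First-order depth uncertainty caused by a disparity error.

    Differentiating Z = f * B / d gives |dZ| = Z**2 / (f * B) * |dd|,
    so the error grows quadratically with depth.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical stereo camera: 1000 px focal length, 12 cm baseline.
for depth in (10.0, 50.0):
    err = stereo_depth_error(1000.0, 0.12, depth)
    print(f"depth {depth:.0f} m -> about +/- {err:.2f} m")
```

With these numbers the error is about 0.42 m at 10 m but about 10.4 m at 50 m. A time-of-flight Lidar measures range directly and does not have this quadratic geometric dependence on distance.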

Performance in Low Light Conditions

Lidar operates independently of ambient light and can accurately detect objects in complete darkness. This can be a huge advantage in low-light conditions like those at night.

Precision and Accuracy

Lidar offers high precision and accuracy in detecting the shape, size, and position of objects. Because objects are captured directly in three dimensions, these properties can be computed from the points themselves, without the complex depth-estimation algorithms that cameras require.
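Because each Lidar point already carries 3D coordinates, an object's size and position follow from simple arithmetic on its points. This is a minimal sketch assuming the object's points have already been segmented out of the scene; it fits an axis-aligned box for simplicity, whereas practical pipelines usually estimate an oriented box. The function name is hypothetical.

```python
from statistics import fmean

Point = tuple[float, float, float]


def box_from_points(points: list[Point]) -> dict[str, tuple]:
    """Axis-aligned bounding box size, box center and centroid of one object's points."""
    xs, ys, zs = zip(*points)
    lo = (min(xs), min(ys), min(zs))
    hi = (max(xs), max(ys), max(zs))
    return {
        # extent along each axis of the sensor frame
        "size": tuple(h - l for l, h in zip(lo, hi)),
        # midpoint of the box
        "center": tuple((l + h) / 2 for l, h in zip(lo, hi)),
        # mean point position, biased toward the surfaces facing the sensor
        "centroid": (fmean(xs), fmean(ys), fmean(zs)),
    }
```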

Resistance to Visual Obstructions

To a certain extent, Lidar can see through obstructions like fog, dust, and rain, providing more reliable data in adverse weather conditions, although heavy fog or rain still degrades its performance. Cameras can be significantly affected by these obstructions.

Wide Field of View

Many Lidar systems, particularly rotating ones, offer a 360-degree horizontal field of view, enabling comprehensive scanning of the environment. Cameras usually have a narrower field of view and require multiple units for full coverage. Without a Lidar, it can also be difficult to combine data from different cameras into a single coherent world map.

What is Lidar Sensor Fusion?

Lidar sensor fusion is the process of integrating Lidar data with data collected from other sensors such as cameras or RADAR systems. Combining the complementary strengths of different sensors lets the system overcome the limitations of one kind of sensor by relying on detections from another, enabling better and more robust perception. Fusing Lidar with cameras is the most common configuration, but sensor fusion can also combine multiple Lidars, RADARs and cameras.

Key advantages of Lidar Sensor Fusion

Redundancy

The key advantage Lidar sensor fusion offers over a single sensor is redundancy. Even if one sensor fails to detect an object, it is likely that at least one of the other sensors will see it. For example, cameras may not work well in low-light conditions, whereas lighting has little effect on Lidar sensors. Similarly, while Lidar sensors usually have a limited effective range, cameras can see objects farther away.

Certainty

When an object is seen by multiple sensors, combining their measurements allows the system to estimate the object's properties more accurately than any single sensor could.
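One standard way to combine several measurements of the same quantity is inverse-variance weighting: the more certain sensor gets more weight, and the fused estimate has a lower variance than any single input. This is a minimal sketch assuming independent errors and measurements already expressed in the same frame and units; the numbers in the example are hypothetical, not sensor specifications. Complete systems typically use a Kalman filter or a learned model, which generalize this idea.

```python
def fuse_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting."""
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    # the fused variance is never larger than the smallest input variance
    return value, 1.0 / total


# Hypothetical distance to the same car: Lidar 20.0 m (variance 0.01 m^2),
# camera 21.0 m (variance 1.0 m^2).
distance, variance = fuse_estimates([(20.0, 0.01), (21.0, 1.0)])
```

Here the fused distance is about 20.01 m, close to the Lidar's reading, and its variance (about 0.0099 m²) is slightly smaller than the Lidar's alone.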

Contextual understanding

Integrating Lidar depth information with the appearance information captured by cameras, such as color and texture, allows for better scene understanding.

How does Lidar sensor fusion work?

Lidar sensor fusion can be classified based on the level of abstraction at which the fusion occurs:

- Early Fusion

- Mid-Level Fusion

- Late Fusion

In the sections below, we elaborate on each of these fusion methods.

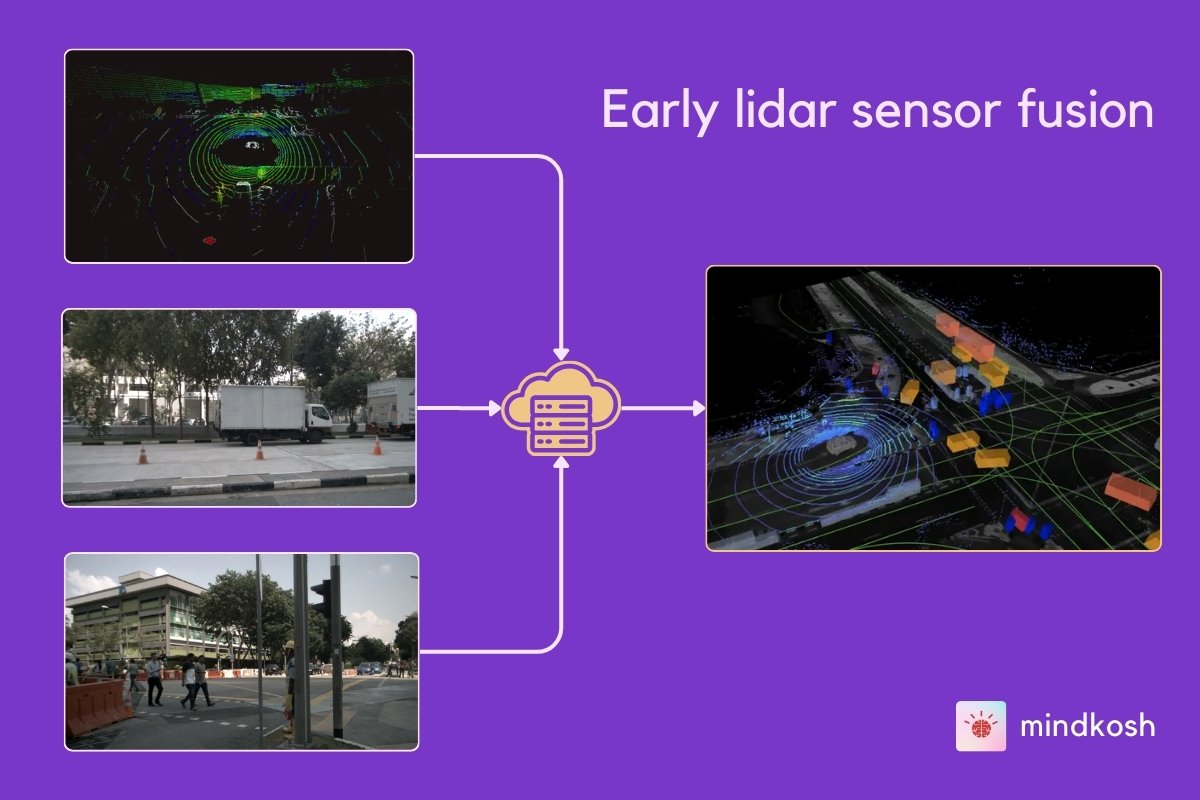

Early Lidar Sensor Fusion

In early sensor fusion, raw data such as Lidar point clouds and images from different cameras is combined to create a unified dataset. A typical early sensor fusion pipeline looks something like this:

- Point Cloud Projection

Points from a Lidar can be projected onto camera images using the relative position and orientation of the Lidar with respect to each camera, and the intrinsic camera parameters, which define how the camera projects points from the real world onto its image sensor.

- Feature Extraction

Identify features such as edges or corners separately within the Lidar point cloud data and the camera images.

- Data Association

Match corresponding features between the projected Lidar points and the camera images to fuse the data.
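As a concrete illustration, one simple form of early fusion attaches projected Lidar depth to the image as an extra channel, producing an RGB-D input that a single model can consume. This is a minimal sketch assuming the Lidar points have already been projected to pixel coordinates (u, v) along with their depths; it uses plain nested lists instead of an array library to stay self-contained, and the function name is hypothetical.

```python
import math

RGB = tuple[int, int, int]


def rgbd_from_projection(image: list[list[RGB]],
                         projected: list[tuple[float, float, float]]) -> list[list[tuple]]:
    """Append a sparse Lidar depth channel to an RGB image.

    image: H x W nested list of (r, g, b) pixels.
    projected: (u, v, depth) per Lidar point, with u = column and v = row in pixels.
    Returns an H x W nested list of (r, g, b, depth); depth 0.0 means no Lidar return.
    """
    height, width = len(image), len(image[0])
    depth = [[0.0] * width for _ in range(height)]
    for u, v, d in projected:
        col, row = math.floor(u), math.floor(v)
        if d <= 0 or not (0 <= row < height and 0 <= col < width):
            continue  # behind the camera or outside the image
        # if several points land on the same pixel, keep the nearest return
        if depth[row][col] == 0.0 or d < depth[row][col]:
            depth[row][col] = d
    return [[(*image[r][c], depth[r][c]) for c in range(width)] for r in range(height)]
```

Most pixels end up with depth 0.0, since a Lidar produces far fewer points than a camera has pixels; this sparsity is one reason early fusion is harder than it first appears.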

Advantages of early sensor fusion

- Early sensor fusion produces a richer dataset with the jointly detected depth and color information.

- Because features are observed by multiple sensors, early fusion can detect objects in the scene with a higher level of accuracy.

Challenges

- Matching sensor data and identifying features from the fused dataset can be computationally expensive.

- There is comparatively little literature on early sensor fusion, which means fewer libraries and algorithms are available for it.

- Accurately matching data from different sensors depends on the accuracy of the extrinsic and intrinsic parameters of the cameras, which can be difficult to obtain.

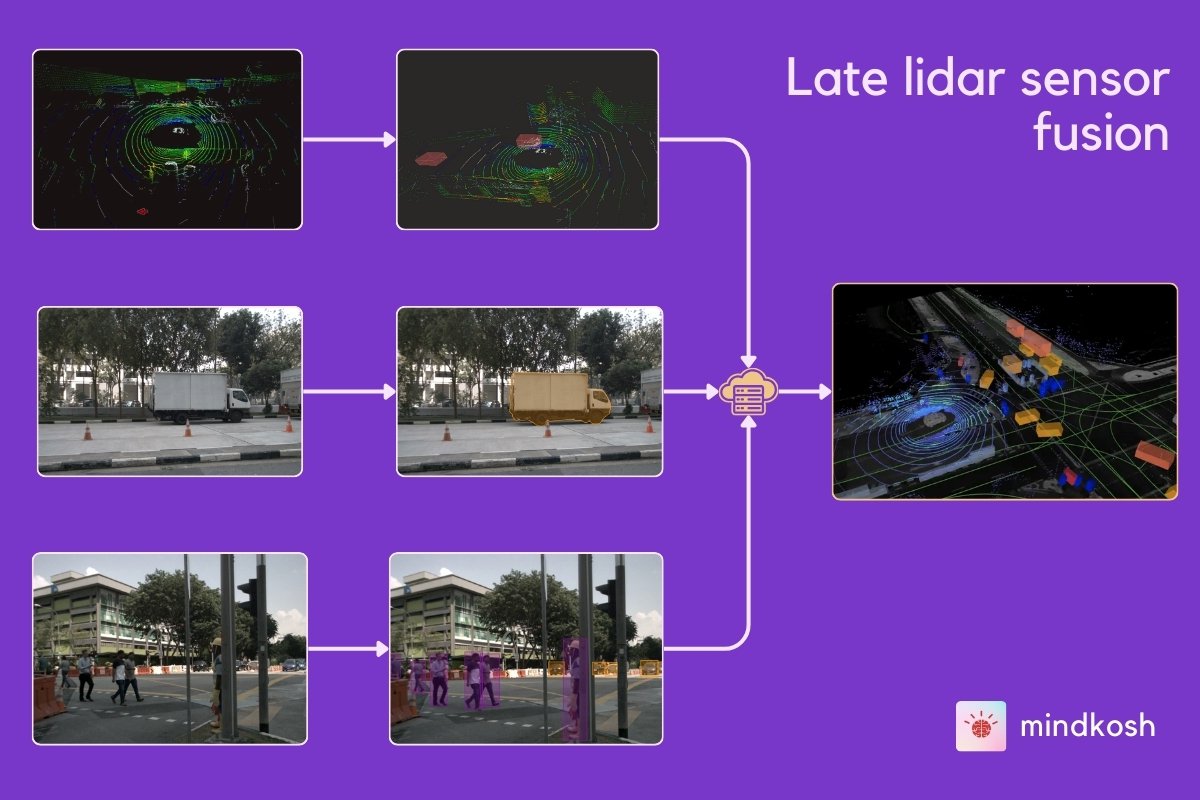

Late Lidar Sensor Fusion

Late fusion combines the outputs of separate sensor processing pipelines into a final output. This involves the following steps:

- Independent Object Detection

Data from the Lidar and from each camera is processed individually to perform object detection and other machine learning tasks. At this stage, the processing pipelines are unaware of each other.

- Result Integration

The outputs of each sensor's processing pipeline are matched based on criteria like spatial overlap (Intersection over Union, IoU) or object tracking over time, to produce the final merged predictions.
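Result integration by spatial overlap can be sketched as a greedy IoU matcher. This sketch assumes, hypothetically, that the Lidar pipeline's detections have already been projected into the image as 2D boxes (x_min, y_min, x_max, y_max), so both pipelines' boxes share the same coordinates; the 0.5 threshold is an arbitrary choice. Greedy matching is a simplification: optimal assignment, for example with the Hungarian algorithm, is a common alternative.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def area(box: Box) -> float:
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def match_detections(lidar_boxes: list[Box], camera_boxes: list[Box], threshold: float = 0.5):
    """Greedily pair detections from two pipelines, highest IoU first.

    Returns (matches, unmatched_lidar, unmatched_camera), where matches holds
    (lidar_index, camera_index, iou) tuples.
    """
    candidates = sorted(
        ((iou(a, b), i, j) for i, a in enumerate(lidar_boxes) for j, b in enumerate(camera_boxes)),
        reverse=True,
    )
    used_lidar, used_camera, matches = set(), set(), []
    for score, i, j in candidates:
        if score < threshold:
            break  # all remaining candidates overlap even less
        if i in used_lidar or j in used_camera:
            continue  # each detection may be matched at most once
        used_lidar.add(i)
        used_camera.add(j)
        matches.append((i, j, score))
    unmatched_lidar = [i for i in range(len(lidar_boxes)) if i not in used_lidar]
    unmatched_camera = [j for j in range(len(camera_boxes)) if j not in used_camera]
    return matches, unmatched_lidar, unmatched_camera
```

The unmatched lists are where late fusion gets difficult: an object reported by only one sensor must be kept or discarded according to some policy.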

Advantages of late sensor fusion

- Lower computational burden compared to early fusion.

- Easier to implement and debug, since the machine learning pipelines for each individual sensor are well established.

Challenges

- Potential for missed associations between objects detected by different sensors.

- Requires robust algorithms for object matching. Matching objects across sensors is not straightforward, because when the outputs of individual sensors disagree, it is difficult to decide which sensor to trust.

Mid-Level Lidar Sensor Fusion

Mid-level Lidar sensor fusion strikes a balance between the early and late fusion approaches. In mid-level fusion, raw data from each sensor is first processed independently to generate intermediate representations (features), which are then fused. This method captures detailed information while maintaining flexibility and efficiency.

The process has the following rough outline:

- Extract meaningful features from the Lidar data and from other sensors, such as cameras or radar. For Lidar, this could mean identifying key points, edges, or surfaces in the 3D point cloud. Similarly, for camera data, features like edges, corners, and textures are identified. Each sensor processes its own data to highlight the most distinctive features of the scene.

- The extracted features are mapped onto a common reference frame by transforming data from one sensor's frame into another's, aligning the features both spatially and temporally. We cover transforming one sensor's data onto another in a later section.

- These intermediate features, now in a common reference frame, are then combined using machine learning models to create a unified representation. This combined representation retains the richness of the original sensor data while improving the robustness of the overall perception system. For instance, a neural network might be trained to take both Lidar and image features as input and produce a more accurate 3D reconstruction or object detection output.
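A very simple form of this idea attaches image features to each Lidar point: every point is projected into the image, the image feature vector at that pixel is looked up, and it is concatenated with the point's own features before the combined vectors are passed to a learned model (not shown). This is a minimal sketch assuming the per-point features, the projected pixel coordinates and the image feature map already exist; it uses nearest-pixel lookup where real systems often interpolate, and all names are hypothetical.

```python
import math
from typing import Optional


def fuse_point_features(point_feats: list[list[float]],
                        pixel_coords: list[Optional[tuple[float, float]]],
                        image_feats: list[list[list[float]]]) -> list[list[float]]:
    """Concatenate each Lidar point's features with the image features at its pixel.

    point_feats: N per-point feature vectors from a Lidar backbone.
    pixel_coords: N (u, v) pixel positions, or None for points not visible in the image.
    image_feats: H x W x C feature map from an image backbone.
    """
    height, width = len(image_feats), len(image_feats[0])
    channels = len(image_feats[0][0])
    fused = []
    for feat, uv in zip(point_feats, pixel_coords):
        image_part = [0.0] * channels  # zeros when the point has no image feature
        if uv is not None:
            col, row = math.floor(uv[0]), math.floor(uv[1])
            if 0 <= row < height and 0 <= col < width:
                image_part = list(image_feats[row][col])  # nearest-pixel lookup
        fused.append(list(feat) + image_part)
    return fused
```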

Mid-level fusion is particularly useful in dynamic environments, where conditions can change rapidly, and different sensors might provide complementary information. By fusing intermediate features, the system can adapt more effectively to variations in lighting, weather, or object appearance. This adaptability and redundancy of sensor data can be extremely important for applications like autonomous driving, where the vehicle must interpret complex scenes and process new scenarios in real-time.

Another advantage of mid-level fusion is that it can be more computationally efficient than early fusion, which operates on the full raw data, while being more informative than late fusion, which combines only high-level outputs. Mid-level sensor fusion thus provides a balanced solution to the sensor fusion problem.

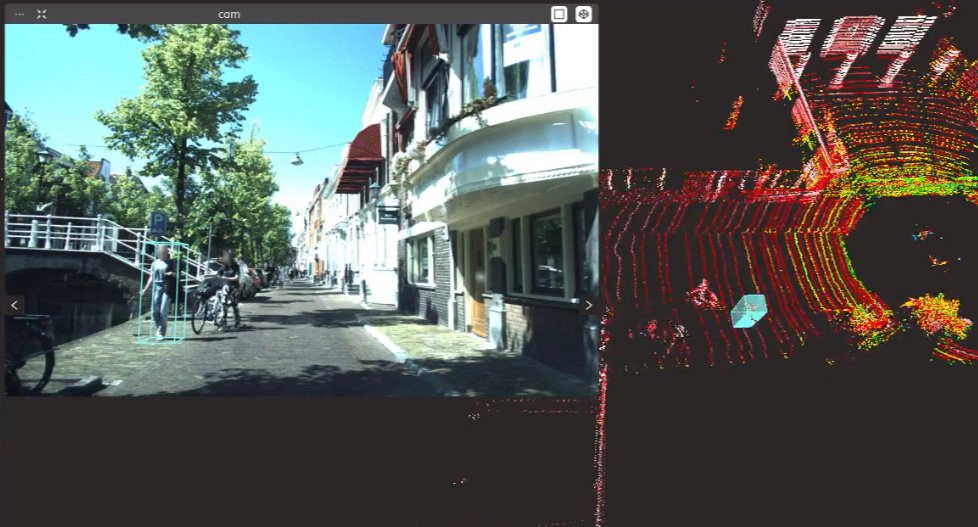

Projecting lidar points onto images

As mentioned in the sections above, both early and mid-level sensor fusion require projecting features and points from Lidar point clouds onto camera images. In fact, regardless of the fusion approach, it can be beneficial to project labeled Lidar objects onto images, either to augment image labels or to measure the label quality of individual sensors. The same approach can also be used to colorize a Lidar point cloud that otherwise has no color: we use colors from the images to add RGB values to the corresponding points in the point cloud.

This projection of lidar data onto images involves a 2-step process:

- Lidar and camera synchronization.

- Projection of points from lidar onto the image plane.

We describe each step in detail below.

Lidar and camera data synchronization

Sensors collect data at different rates. For example, a Lidar might capture point clouds at 15 Hz (15 sweeps per second), while a camera might capture images at 20 Hz (20 FPS). If we collect data for 1 second, we would have 15 point clouds and 20 images from each camera. This means that at least 5 images simply have no corresponding point cloud, and in general the capture times of the two sensors will not line up exactly. This is why synchronizing Lidar and camera data is so important.

Also note that if the vehicle or robot carrying the Lidar is moving quickly and synchronization is not done properly, the scene described by a point cloud and its paired camera image can differ significantly, because they captured the environment at different points in time and space.

Synchronization itself can be carried out by matching the timestamps of point clouds and images to find the data samples that are closest to each other in time.
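Timestamp matching can be sketched as a nearest-neighbor search over sorted Lidar timestamps. This is a minimal sketch assuming both sensors' timestamps are on a common clock and in seconds; the 20 ms tolerance is a hypothetical choice that should depend on the sensors and on how fast the platform moves. Camera frames with no sweep within the tolerance are dropped rather than paired with stale data.

```python
import bisect


def match_timestamps(lidar_ts: list[float], camera_ts: list[float],
                     max_offset_s: float = 0.02) -> list[tuple[int, int]]:
    """Pair each camera frame with the Lidar sweep closest to it in time.

    lidar_ts must be sorted in ascending order. Returns (camera_index, lidar_index)
    pairs; camera frames with no sweep within max_offset_s are skipped.
    """
    pairs = []
    for cam_idx, t in enumerate(camera_ts):
        k = bisect.bisect_left(lidar_ts, t)
        # the nearest sweep is either just before or just after t
        neighbours = [i for i in (k - 1, k) if 0 <= i < len(lidar_ts)]
        if not neighbours:
            continue
        best = min(neighbours, key=lambda i: abs(lidar_ts[i] - t))
        if abs(lidar_ts[best] - t) <= max_offset_s:
            pairs.append((cam_idx, best))
    return pairs


# 1 second of data: Lidar at 15 Hz, camera at 20 Hz, both starting at t = 0.
lidar_times = [i / 15 for i in range(15)]
camera_times = [i / 20 for i in range(20)]
pairs = match_timestamps(lidar_times, camera_times)
```

With the 15 Hz and 20 Hz rates from the example above and both sensors starting at the same instant, this pairs 15 of the 20 camera frames; the other 5 sit about 33 ms from the nearest sweep and are left unpaired. The tolerance matters because motion turns time offsets into spatial offsets: a vehicle moving at 20 m/s travels 0.5 m in 25 ms.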

Projection of points from lidar onto images

Once we have time-synchronized frames of data from Lidar and cameras, we are ready to project the points. This is usually a 2-step process:

First, the Lidar and camera devices are carefully calibrated. This involves determining the exact position and orientation of the sensors relative to each other. Once calibrated, the Lidar points can be transformed into the camera's coordinate system using a rotation matrix and a translation vector. These parameters, which describe the position and orientation of the sensors relative to each other, are called extrinsic parameters. Note that if the Lidar or cameras are moved relative to each other, the extrinsic parameters must be recalculated.
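Applying the extrinsic parameters amounts to one rotation and one translation per point, p_cam = R * p_lidar + t. This is a minimal sketch assuming R and t map Lidar coordinates into camera coordinates (calibration tools differ in which direction they report). The example rotation converts from an assumed Lidar convention (x forward, y left, z up) to a common camera convention (x right, y down, z forward), and the translation is hypothetical.

```python
Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]


def lidar_to_camera(points: list[Vec3], rotation: Mat3, translation: Vec3) -> list[Vec3]:
    """Transform points from the Lidar frame into the camera frame: p_cam = R @ p + t."""
    transformed = []
    for p in points:
        transformed.append(tuple(
            sum(rotation[row][k] * p[k] for k in range(3)) + translation[row]
            for row in range(3)
        ))
    return transformed


# Axis change: camera x = -Lidar y, camera y = -Lidar z, camera z = Lidar x.
R = [[0.0, -1.0, 0.0],
     [0.0, 0.0, -1.0],
     [1.0, 0.0, 0.0]]
# Hypothetical mounting: Lidar 0.2 m above the camera (camera y points down).
t = (0.0, -0.2, 0.0)
```

A point 10 m straight ahead of the Lidar, (10, 0, 0), ends up 10 m in front of the camera and 0.2 m above its optical axis.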

Next, each Lidar point (now in the camera's frame of reference) is projected onto the 2D image plane. This requires the camera's intrinsic parameters, such as focal length and optical center, to map the 3D coordinates accurately onto 2D pixel coordinates. Unlike extrinsic parameters, intrinsic parameters are inherent to the camera and do not change when the camera is moved. The result is a set of Lidar points overlaid onto the camera image, providing depth information at the pixels where Lidar points land.
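The projection step can be sketched with the pinhole camera model. This is a minimal sketch assuming the points are already in the camera frame with z pointing forward, x right and y down, and ignoring lens distortion, which real calibrations usually model (OpenCV's `cv2.projectPoints`, for example, applies distortion coefficients). Here fx and fy are focal lengths in pixels and (cx, cy) is the optical center; the example intrinsics are hypothetical.

```python
def project_to_image(points_cam: list[tuple[float, float, float]],
                     fx: float, fy: float, cx: float, cy: float,
                     width: int, height: int) -> list[tuple[float, float, float]]:
    """Project camera-frame points onto the image with an undistorted pinhole model.

    Returns (u, v, depth) for every point in front of the camera that lands
    inside the width x height image.
    """
    projected = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # at or behind the camera plane, so it cannot be imaged
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0 <= u < width and 0 <= v < height:
            projected.append((u, v, z))
    return projected


# Hypothetical 1280 x 720 camera with a 1000 px focal length.
pixels = project_to_image([(0.0, 0.0, 10.0), (1.0, -0.5, 10.0), (0.0, 0.0, -5.0)],
                          1000.0, 1000.0, 640.0, 360.0, 1280, 720)
```

The point on the optical axis lands at the image center (640, 360), the point behind the camera is discarded, and every surviving point keeps its depth, which is what makes the overlay useful for fusion and for colorizing the point cloud.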

In practice, this projection needs to be both accurate and fast to meet the real-time requirements of many applications, such as autonomous driving.

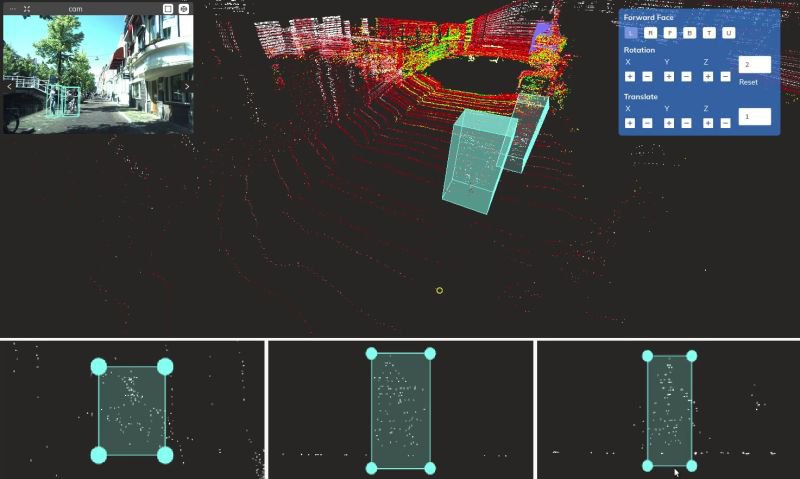

Annotating data for Sensor fusion

Annotating data for sensor fusion is more complicated than annotating individual sensor data like point clouds or images. Because Lidar point clouds are often sparse, especially far from the Lidar device, labelers need access to Lidar features projected onto images. This additional image data gives them important context for identifying objects in the point cloud. Doing this, however, requires specially built tools that handle the multi-sensor setting. At the very least, the annotation platform must be able to:

- Display data from all sensors in the same interface, so labelers have a better understanding of the environment.

- Project annotations from point cloud onto images, thereby providing important context when labeling objects.

- Maintain consistent instance IDs across time and sensors, so that we can identify an object as it moves across time and appears or disappears in multiple sensors.

The Mindkosh annotation platform covers all these points and many more to ensure sensor fusion annotation can be achieved with high accuracy. You can learn more about our sensor fusion annotation platform here.

Industrial applications of Lidar Sensor Fusion

Lidar sensor fusion is essential in various applications, particularly autonomous vehicles and robotics.

Autonomous Vehicles

- Lidar sensor fusion improves the accuracy of object detection and tracking by combining data from multiple sensors.

- By combining data from different, often complementary sensors, Lidar sensor fusion improves safety by reducing blind spots.

Robotics

- Enables precise navigation and obstacle avoidance.

- Facilitates detailed 3D mapping and environment modelling.

- Supports complex tasks like picking and placing in industrial automation.

Augmented Reality (AR)

- Enhances spatial understanding of, and interaction with, objects in the physical environment.

- Helps improve the accuracy of virtual object positioning in augmented reality applications.

Summary

- What is Lidar sensor fusion?

Lidar sensor fusion merges data from Lidar sensors with information from other sensors like cameras and RADARs to create a comprehensive understanding of the environment.

- Why is Lidar sensor fusion essential?

It enhances perception accuracy by combining complementary data sources, improving the reliability and robustness of autonomous systems in various conditions.

- How does Lidar sensor fusion work?

Fusion can be early, mid-level, or late, depending on when and how data is integrated during processing, with the choice tailored to specific application requirements.

- What are the primary benefits of Lidar sensor fusion?

- Precision: Enables accurate distance measurement and object detection.

- Redundancy: Improves safety by using redundant data sources.

- Holistic Perception: Integrates depth and contextual information for a comprehensive view of the scene.

- Where is Lidar sensor fusion used?

Lidar sensor fusion is commonly employed in autonomous vehicles, robotics, and augmented reality systems for tasks such as navigation, obstacle avoidance, and environment mapping.