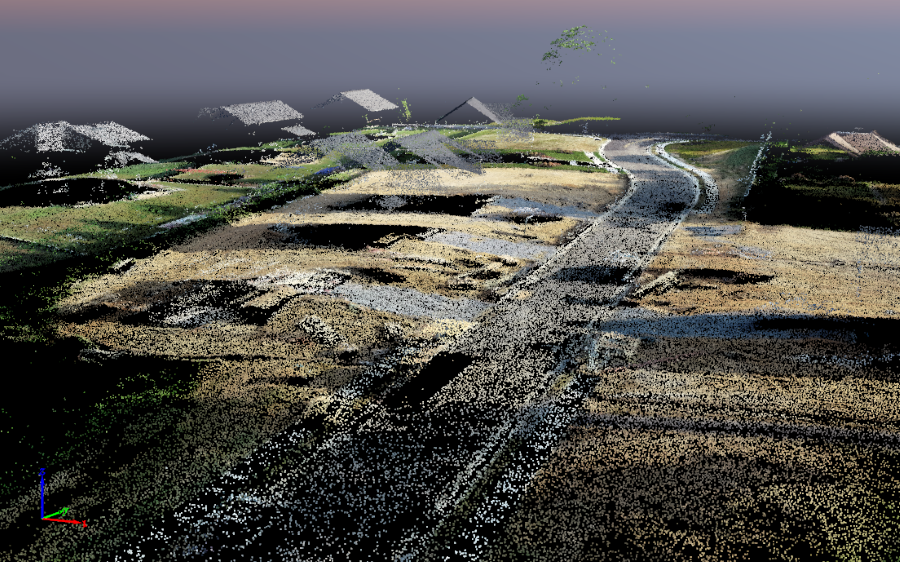

Imagine capturing the real world — every twist of a mountain trail, every brick in a century-old building, every corner of a busy city street — not in pictures, but as millions of tiny, precise 3D points. That’s the magic of point cloud data.

These 3D point clouds, typically captured using LiDAR sensors, drones, or terrestrial scanners, allow us to digitally recreate physical environments with stunning detail.

But while point cloud data opens the door to innovation, working with it isn’t always easy. Point cloud analysis has some common pitfalls that can silently derail an entire workflow if they are not handled properly: missed measurements, poor segmentation, misidentified features, and more.

In this blog, we look at the real difficulties of working with point clouds, unpack the reasons behind them, and offer practical methods to counter them. Whether you are a novice or an advanced user, the aim is to help you understand how these problems affect your results and how to reduce the risks in your point cloud workflows.

Understanding point cloud data

Before we dig into what can go wrong, let’s first lay the foundation: What exactly is point cloud data?

At its simplest, a point cloud is a collection of data points in 3D space. Each point has a position (X, Y, Z) and may also carry additional information like color, intensity, reflectivity, or timestamp. Together, these points represent the external surface of an object or scene.
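To make this concrete, here is a minimal sketch of how such points might be held in memory with NumPy. It assumes a hypothetical per-point layout of X, Y, Z plus intensity and RGB. Real file formats such as LAS/LAZ define their own, richer schemas, so the field names here are illustrative only.

```python
import numpy as np

# Hypothetical per-point record: position plus two optional attributes.
point_dtype = np.dtype([
    ("x", np.float64),
    ("y", np.float64),
    ("z", np.float64),
    ("intensity", np.uint16),   # return strength, sensor-specific scale
    ("rgb", np.uint8, (3,)),    # colour, e.g. from a co-registered camera
])

points = np.zeros(3, dtype=point_dtype)
points["x"] = [0.0, 1.0, 2.0]
points["y"] = [0.0, 0.5, 1.0]
points["z"] = [10.0, 10.2, 10.1]
points["intensity"] = [120, 340, 95]
points["rgb"] = [[200, 180, 150], [90, 90, 90], [30, 120, 40]]

# Most geometric operations only need positions, as an (N, 3) float array.
xyz = np.column_stack([points["x"], points["y"], points["z"]])
print(xyz.shape)  # (3, 3)
```

Keeping the attributes alongside the positions means filters and segmentation steps can drop or relabel points without losing their colour or intensity.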

Point clouds are commonly produced using the following techniques:

- LiDAR (Light Detection and Ranging): A remote sensing technique that uses a laser beam to measure distances.

- Terrestrial laser scanners: Ground-based instruments used in surveying, construction, and the documentation of monuments and heritage sites.

- Photogrammetry from airplanes, UAVs, or drones: Reconstructing three-dimensional scenes from overlapping images.
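For a pulsed time-of-flight LiDAR, range comes from timing the laser pulse's round trip. The pulse travels to the surface and back, so the distance is half the total path length. The sketch below assumes propagation at the vacuum speed of light and ignores atmospheric effects. Phase-shift and FMCW sensors measure range differently.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; light in air is slightly slower

def tof_range(round_trip_s):
    """Range in metres from a pulse's round-trip time in seconds."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0  # halve: the pulse goes out and back

print(round(tof_range(200e-9), 2))  # 29.98 (metres, for a 200 ns round trip)
```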

These raw datasets are large and rich in detail, but they are also complex, often incomplete, and hard to interpret without further refinement.

Scan datum and control points

Alignment (registration) is often the most troublesome step in the point cloud processing sequence, and it becomes harder when multiple scans must be combined into a single unified dataset.

Each scan has its own datum, or origin point, and is tied to a real-world coordinate system through control points that serve as anchors. If scan datums are misaligned or control points are poorly chosen, the entire dataset can drift or warp. This is a serious problem for large-scale reconstructions such as accurate city mapping or infrastructure inspection.
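One common way to tie a scan to real-world coordinates is to estimate the rigid rotation and translation that best map the scan's control points onto their surveyed positions. The sketch below uses the SVD-based Kabsch method and assumes the point correspondences are already known. The function names are hypothetical, and target-free cloud-to-cloud alignment (e.g. ICP) is a separate technique. The RMS residual afterwards is a useful sanity check: a value much larger than the survey accuracy suggests a misidentified or poorly placed control point.

```python
import numpy as np

def estimate_rigid_transform(src, dst):
    """Least-squares R, t with dst ≈ src @ R.T + t (Kabsch method).

    src, dst: (N, 3) arrays of corresponding control points, N >= 3,
    not all collinear.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)       # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def rms_residual(src, dst, R, t):
    """Root-mean-square distance between transformed src and dst."""
    diff = src @ R.T + t - dst
    return float(np.sqrt((diff ** 2).sum(axis=1).mean()))

# Synthetic example: scan-local control points and their "surveyed" world
# coordinates, related by a 30-degree rotation about Z plus an offset.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
local = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0],
                  [0.0, 8.0, 0.0], [3.0, 4.0, 2.0]])
world = local @ R_true.T + np.array([500.0, 200.0, 35.0])

R, t = estimate_rigid_transform(local, world)
print(rms_residual(local, world, R, t))  # close to zero for noise-free points
```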

Common pitfalls in point cloud analysis

Let’s review a few sticking points that most projects run into. Below are the common problems in point cloud analysis that we will focus on.

1. Point density and data quality

Think of point cloud density like pixel resolution in a photograph: too low and you lose detail, too high and the file becomes cumbersome to work with.

In point clouds with too little density, critical features such as sharp edges, small objects, or narrow gaps may be missing entirely.

On the other hand, overly dense clouds slow down analysis, consume excess memory, and decrease overall performance.
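Density is easier to reason about once you measure it. Here is a minimal sketch, assuming an (N, 3) NumPy array in metres. It bins points into an XY grid and counts the points per cell, so sparse cells (possible gaps) and oversampled cells stand out. The function name and cell size are illustrative.

```python
import numpy as np

def density_per_cell(xyz, cell_size=1.0):
    """Count points in each occupied square XY cell of side `cell_size`.

    Returns {(i, j): count}. Empty cells are absent, so gaps show up as
    missing keys or unusually low counts.
    """
    cells = np.floor(xyz[:, :2] / cell_size).astype(np.int64)
    keys, counts = np.unique(cells, axis=0, return_counts=True)
    return {(int(i), int(j)): int(c) for (i, j), c in zip(keys, counts)}

rng = np.random.default_rng(0)
xyz = rng.uniform([0, 0, 0], [2, 2, 1], size=(400, 3))  # synthetic 2 m x 2 m patch
density = density_per_cell(xyz, cell_size=1.0)
print(len(density), sum(density.values()))  # 4 400
```

Dividing each count by the cell area (here 1 m²) gives points per square metre. You can then compare that figure against the density your task actually needs.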

The quality of the raw data is just as important. Noise caused by rain, moving objects, or reflective surfaces can create ghost points and obscure vital geometry.

Best practice: Aim for a density that suits the task at hand. Clean noisy data and remove outliers with filters before analysis.
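As an example of such a filter, here is a sketch of statistical outlier removal, the same idea behind PCL's StatisticalOutlierRemoval filter. Points whose mean distance to their k nearest neighbours is unusually large are discarded. The sketch uses brute-force distances to stay self-contained, which is only practical for small clouds; real pipelines use a spatial index such as a KD-tree. The `k` and `std_ratio` values are assumptions you would tune per dataset.

```python
import numpy as np

def statistical_outlier_mask(xyz, k=8, std_ratio=2.0):
    """Return a boolean mask that is True for points kept as inliers."""
    diff = xyz[:, None, :] - xyz[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))   # (N, N) pairwise distances
    np.fill_diagonal(dist, np.inf)             # a point is not its own neighbour
    knn = np.sort(dist, axis=1)[:, :k]         # k nearest distances per point
    mean_knn = knn.mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return mean_knn <= threshold

rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(200, 3))   # dense synthetic object
ghosts = np.array([[10.0, 10.0, 10.0], [-8.0, 5.0, 3.0], [0.0, 0.0, 20.0]])
xyz = np.vstack([cloud, ghosts])
mask = statistical_outlier_mask(xyz)
print(mask.sum())  # 200: the three isolated ghost points are removed
```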

2. Poor segmentation

Segmentation is about dividing the point cloud into meaningful parts such as separating a road from a building or identifying trees versus traffic lights.

Real-world scenes are complicated by shadows, occluded parts, scanning errors, and complex geometries. Poor segmentation algorithms can mishandle objects by lumping them together or splitting them in strange ways, which can lead to incorrect analysis or downstream model failures.

For example, if the segmentation is not accurate, a self-driving car could mistake a street sign for part of an adjacent tree.
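Lumping and splitting are easy to reproduce with a classical method. The sketch below uses Euclidean cluster extraction, a simple non-learned segmentation baseline rather than the deep learning approach recommended below. Points join the same cluster if a chain of neighbours closer than a distance tolerance links them. The example uses two synthetic "objects" 0.5 m apart. A tolerance that is too small shatters them into single points, and one that is too large merges them into one.

```python
import numpy as np
from collections import deque

def euclidean_clusters(xyz, tolerance):
    """Label connected components: points linked by a chain of neighbours
    closer than `tolerance` share a label. Brute-force neighbour search."""
    n = len(xyz)
    labels = np.full(n, -1, dtype=int)
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue                      # already assigned to a cluster
        labels[seed] = current
        queue = deque([seed])
        while queue:                      # breadth-first region growing
            i = queue.popleft()
            d = np.linalg.norm(xyz - xyz[i], axis=1)
            for j in np.flatnonzero((d < tolerance) & (labels == -1)):
                labels[j] = current
                queue.append(j)
        current += 1
    return labels

# Two synthetic objects: points every 0.1 m along two vertical lines 0.5 m apart.
z = 0.1 * np.arange(20)
pole = np.column_stack([np.zeros_like(z), np.zeros_like(z), z])
sign = np.column_stack([np.full_like(z, 0.5), np.zeros_like(z), z])
xyz = np.vstack([pole, sign])

for tol in (0.05, 0.2, 0.6):
    print(tol, len(np.unique(euclidean_clusters(xyz, tol))))
# 0.05 -> 40 clusters (over-split), 0.2 -> 2 (correct), 0.6 -> 1 (lumped)
```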

Recommendation: Use deep learning-based segmentation models and fuse data from multiple sensors for better accuracy.

3. Choosing the right algorithm for the task

There is no one-size-fits-all rule in point cloud analysis. An algorithm that detects tree canopy in an environmental study will not necessarily work for identifying potholes along a highway.

Yet a common mistake is applying off-the-shelf algorithms to highly specific tasks.

Using the wrong algorithm can lead to poor performance, misinterpreted features, and missed opportunities for optimization.

Ask yourself:

- Is your application real-time (e.g., autonomous driving) or offline?

- Are you working with indoor environments or wide-open outdoor scenes?

- Is your use case precision-critical (e.g., surveying) or more interpretive (e.g., gaming or VR)?

Tip: Always match your algorithm to the task, and validate it on representative datasets before scaling up.

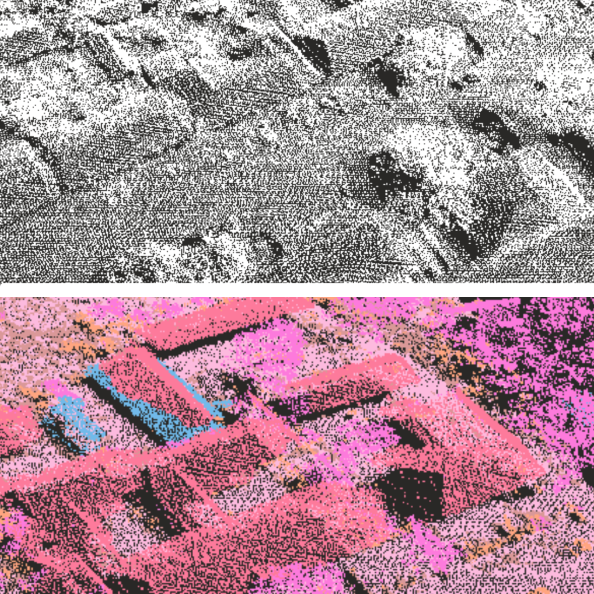

4. Semantic segmentation and misclassification

Semantic segmentation goes a step beyond simple segmentation — it labels every point in the cloud with a category: road, pedestrian, car, pole, vegetation, etc.

But even powerful AI models can get confused. Points that are spatially close but semantically different (like a person next to a wall) can be misclassified due to noise, incomplete data, or lack of contextual understanding.

Misclassification is more than a nuisance — it can be dangerous. For example, a car misclassifying a child as a signpost could be catastrophic.

Fix: Train models on diverse, high-quality annotated datasets. Combine LiDAR with camera input (sensor fusion) for better semantic accuracy.
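Whichever model you use, measure where misclassification actually happens before you trust it. Here is a minimal sketch, assuming integer class labels per point. A confusion matrix shows which classes are mistaken for which. Per-class intersection over union (IoU = TP / (TP + FP + FN)) exposes weak classes that an overall accuracy figure would hide. The label IDs in the example are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, num_classes):
    """Rows: ground-truth class; columns: predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

def per_class_iou(cm):
    """IoU_c = TP / (TP + FP + FN); NaN for classes absent from both."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp   # predicted as c, but actually another class
    fn = cm.sum(axis=1) - tp   # actually c, but predicted as another class
    denom = tp + fp + fn
    with np.errstate(divide="ignore", invalid="ignore"):
        return np.where(denom > 0, tp / denom, np.nan)

# Hypothetical label IDs: 0 = road, 1 = pedestrian, 2 = pole.
y_true = np.array([0, 0, 0, 0, 1, 1, 1, 2, 2, 2])
y_pred = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2, 2])
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)
print(per_class_iou(cm))  # per-class IoU: 0.75, 0.5, 0.75
```

Overall accuracy here is 80%. Yet the pedestrian point labelled as a pole, visible in `cm[1, 2]`, is exactly the kind of error the per-class view brings to the surface.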

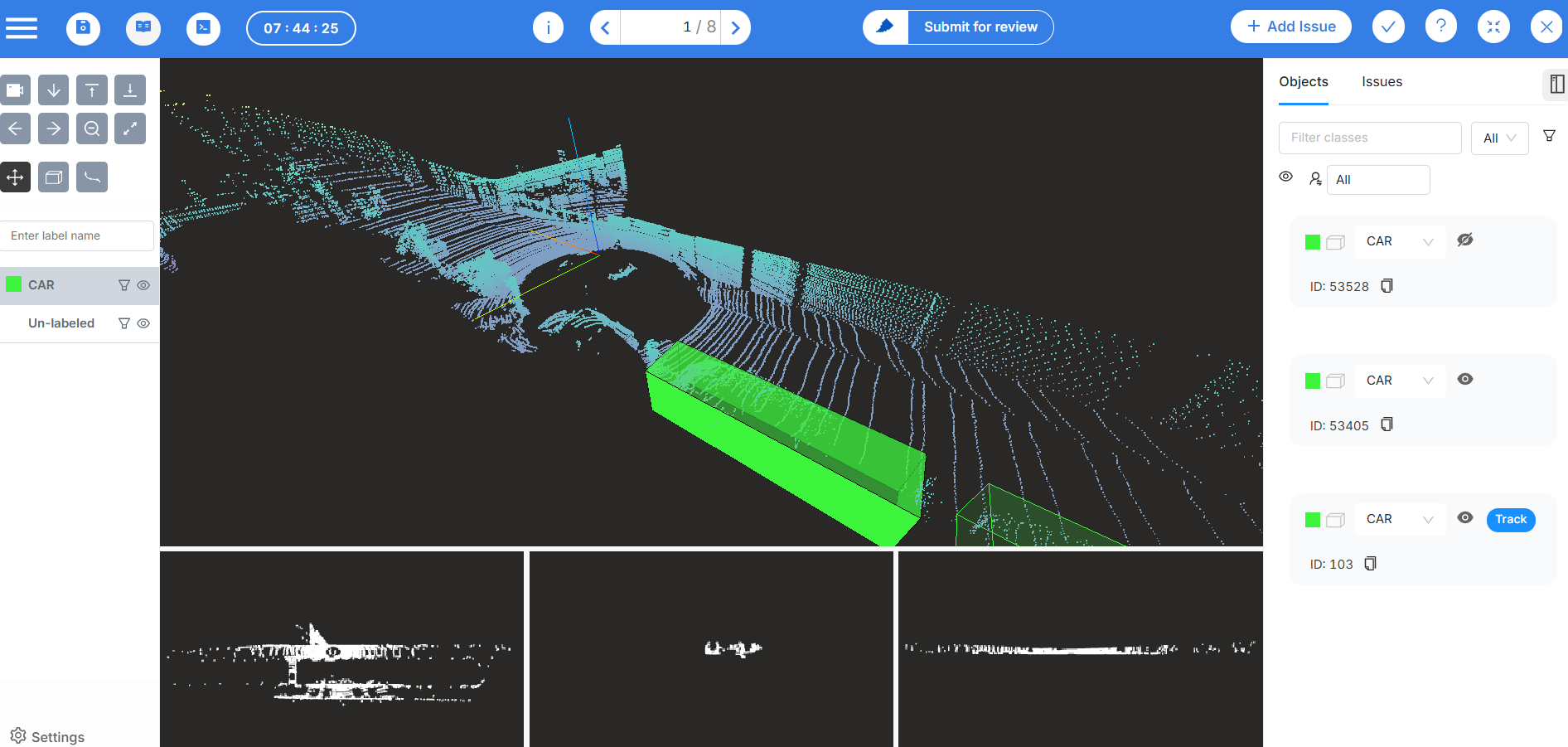

5. Pitfalls in point cloud annotation

Every AI model is only as good as the data it's trained on — and that starts with annotation. Here are three critical annotation challenges:

- Labeling Accuracy: Inconsistent or sloppy labeling can confuse the model and degrade performance.

- Sparse or Noisy Data: Annotating sparse or overlapping objects is tedious and error-prone.

- Dataset Consistency: When multiple annotators label data without standard guidelines, you end up with a fragmented training set.

Pro Tip: Build a strong annotation pipeline with QA checks, annotator training, and automated tools for consistency.
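One simple automated QA check is to have two annotators label the same frame and then measure their agreement. The sketch below computes Cohen's kappa over per-point labels, which corrects raw agreement for the agreement expected by chance. What counts as acceptable agreement is a project-specific choice, and the labels shown are made up for illustration.

```python
import numpy as np

def cohens_kappa(labels_a, labels_b, num_classes):
    """kappa = (p_o - p_e) / (1 - p_e): observed vs. chance agreement."""
    labels_a = np.asarray(labels_a)
    labels_b = np.asarray(labels_b)
    p_o = np.mean(labels_a == labels_b)                  # observed agreement
    freq_a = np.bincount(labels_a, minlength=num_classes) / len(labels_a)
    freq_b = np.bincount(labels_b, minlength=num_classes) / len(labels_b)
    p_e = float(np.dot(freq_a, freq_b))                  # chance agreement
    if p_e == 1.0:
        return 1.0  # both annotators used the same single class throughout
    return float((p_o - p_e) / (1.0 - p_e))

annotator_a = [0, 0, 1, 1, 2, 2, 0, 1]
annotator_b = [0, 0, 1, 2, 2, 2, 0, 1]
kappa = cohens_kappa(annotator_a, annotator_b, num_classes=3)
print(round(kappa, 3))  # 0.814 (raw agreement is 0.875)
```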

Try Mindkosh's Smart Annotation Tools — you can automate, review, and validate annotations faster with our powerful LiDAR and 3D annotation features.

Methods and techniques to overcome pitfalls

Now for the good news — every pitfall we discussed has a solution. Let’s explore how to steer clear of trouble and enhance the value of your point cloud data.

1. Advanced filtering & algorithm selection

Start by investing in pre-processing filters that clean up raw point clouds. This can include:

- Outlier removal

- Statistical noise filtering

- Smoothing

- Downsampling
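Downsampling is often done with a voxel grid, the approach behind PCL's VoxelGrid filter. Space is divided into cubes of a chosen size, and all points in a cube are replaced by their centroid. Here is a NumPy sketch, assuming coordinates in metres; the voxel size is an illustrative choice.

```python
import numpy as np

def voxel_downsample(xyz, voxel_size):
    """Replace all points falling in the same cubic voxel by their centroid."""
    voxel_idx = np.floor(xyz / voxel_size).astype(np.int64)
    # `inverse` maps each point to the row of its voxel among the unique voxels.
    _, inverse = np.unique(voxel_idx, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)   # its shape differs across NumPy versions
    n_voxels = inverse.max() + 1
    sums = np.zeros((n_voxels, 3))
    np.add.at(sums, inverse, xyz)   # accumulate coordinates per voxel
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]

rng = np.random.default_rng(1)
xyz = rng.uniform(0.0, 1.0, size=(10_000, 3))   # synthetic 1 m cube, 10k points
down = voxel_downsample(xyz, voxel_size=0.25)
print(len(down))  # 64: one centroid per occupied 0.25 m voxel
```

Using the centroid rather than an arbitrary point from each voxel preserves the local surface position better. The trade-off is that the output points are no longer original measurements.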

From there, use task-specific algorithms for segmentation, feature extraction, or modeling. Hybrid approaches that combine heuristic and learning-based methods often work best.

For instance:

- Use a rule-based method to identify ground points

- Follow up with a neural network to classify complex structures above the ground
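The rule-based half of that pipeline can be very simple. The sketch below is a deliberately crude ground heuristic, an assumption for illustration rather than a specific published method. In each XY grid cell, points within a height tolerance of the cell's lowest point are labelled ground. It breaks down on steep slopes and wherever a cell contains no ground at all (for example, under a large roof), which is why more robust ground filters exist. The remaining non-ground points are what you would pass on to the neural network.

```python
import numpy as np

def ground_mask(xyz, cell_size=1.0, height_tol=0.2):
    """Label points within `height_tol` of their XY cell's lowest point as ground."""
    cells = np.floor(xyz[:, :2] / cell_size).astype(np.int64)
    _, inverse = np.unique(cells, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)                # shape varies across NumPy versions
    cell_min = np.full(inverse.max() + 1, np.inf)
    np.minimum.at(cell_min, inverse, xyz[:, 2])  # lowest z per cell
    return xyz[:, 2] - cell_min[inverse] <= height_tol

rng = np.random.default_rng(0)
ground = rng.uniform([0, 0, 0.0], [4, 4, 0.05], size=(2000, 3))    # flat synthetic terrain
box = rng.uniform([1.2, 1.2, 1.0], [1.8, 1.8, 1.5], size=(100, 3))  # object above it
xyz = np.vstack([ground, box])
mask = ground_mask(xyz)
print(mask[:2000].all(), mask[2000:].any())  # True False
```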

2. Leveraging LiDAR and smart sensors

Not all sensors are created equal. Modern LiDAR sensors can offer high accuracy (millimeter-level for many terrestrial scanners), multiple returns per pulse, and, on some models, colorized output from an integrated camera.

Choose sensors based on:

- Required range and resolution

- Environmental conditions (e.g., rain, fog)

- Frame rate and refresh cycle

And don’t forget calibration! Misaligned sensors can skew your data dramatically.

Pairing LiDAR with RGB cameras, IMUs, or GNSS can create rich, contextualized point clouds that improve both accuracy and interpretability.
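Fusing LiDAR with a camera starts with projecting the points into the image using the calibration. Here is a minimal sketch, assuming a pinhole camera with intrinsic matrix K, LiDAR-to-camera extrinsics R and t, and no lens distortion; all numeric values below are hypothetical. It also shows why calibration matters. With a focal length of 500 pixels, a 1° rotation error shifts a distant point by roughly 500 · tan(1°) ≈ 9 pixels. That can be enough to assign an object's colour or label to whatever sits next to it.

```python
import numpy as np

def project_to_image(xyz_lidar, R, t, K):
    """Project LiDAR points into pixel coordinates with a pinhole model.

    p_cam = R @ p_lidar + t; points with p_cam.z <= 0 are behind the camera
    and are dropped. Lens distortion is ignored.
    """
    p_cam = xyz_lidar @ R.T + t
    in_front = p_cam[:, 2] > 0
    uvw = p_cam[in_front] @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]     # perspective divide
    return uv, in_front

# Hypothetical calibration. LiDAR axes: x forward, y left, z up.
# Camera axes: x right, y down, z forward (optical axis).
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = np.zeros(3)
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])

pts = np.array([[10.0, 0.0, 0.0],    # straight ahead
                [10.0, 1.0, 0.5],    # ahead, to the left and slightly up
                [-5.0, 0.0, 0.0]])   # behind the sensor
uv, in_front = project_to_image(pts, R, t, K)
print(uv)  # pixel coordinates (320, 240) and (270, 215); the third point is dropped
```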

3. Real-time processing for autonomous systems

In autonomous driving or robotics, point clouds need to be processed in milliseconds. This requires:

- Optimized, low-latency algorithms

- GPU acceleration

- Parallel computing

Libraries like PCL (Point Cloud Library) and frameworks like ROS (Robot Operating System) offer robust tools for building real-time applications.

Incorporate sliding windows, voxel grids, or region-growing methods for on-the-fly object detection and tracking.
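A sliding window over recent frames is one of the simplest of these building blocks. The sketch below keeps the last N frames and returns them as one merged cloud, which could then feed a voxel grid or clustering step. It assumes each frame has already been transformed into a common coordinate frame (e.g. using odometry); the class name is hypothetical.

```python
import numpy as np
from collections import deque

class SlidingWindow:
    """Keep the most recent `size` frames and expose them as one merged cloud.

    Assumes every frame is already in a common coordinate frame; merging
    unaligned frames would smear moving and static objects alike.
    """
    def __init__(self, size):
        self.frames = deque(maxlen=size)   # the oldest frame drops out automatically

    def push(self, xyz):
        self.frames.append(np.asarray(xyz, dtype=float))

    def merged(self):
        if not self.frames:
            return np.empty((0, 3))
        return np.vstack(list(self.frames))

rng = np.random.default_rng(2)
window = SlidingWindow(size=3)
for _ in range(5):
    window.push(rng.normal(size=(100, 3)))   # five synthetic frames of 100 points
print(window.merged().shape)  # (300, 3): only the last 3 frames are kept
```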

4. Smart annotation tools

To overcome annotation fatigue and inconsistency, use:

- Semi-automated labeling powered by AI

- Interpolation across frames in dynamic scenes

- Template-based annotation for repeating patterns

- QA dashboards to ensure cross-labeler consistency
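Interpolation across frames typically means an annotator labels a box on a few keyframes and the frames in between are filled in automatically. Here is a minimal sketch of linear interpolation for a 3D box (centre, size, yaw), assuming box dicts in a hypothetical format. It does not describe any particular tool's implementation. The yaw is interpolated along the shortest arc, so a box turning from 350° to 10° does not spin the long way round.

```python
import numpy as np

def interpolate_box(box_a, box_b, frame_a, frame_b, frame):
    """Linearly interpolate a 3D box between two annotated keyframes.

    Boxes are dicts with 'center' (3,), 'size' (3,) and 'yaw' (radians).
    """
    alpha = (frame - frame_a) / (frame_b - frame_a)
    center = (1 - alpha) * np.asarray(box_a["center"]) + alpha * np.asarray(box_b["center"])
    size = (1 - alpha) * np.asarray(box_a["size"]) + alpha * np.asarray(box_b["size"])
    # Shortest signed angular difference, in [-pi, pi).
    dyaw = (box_b["yaw"] - box_a["yaw"] + np.pi) % (2 * np.pi) - np.pi
    yaw = (box_a["yaw"] + alpha * dyaw + np.pi) % (2 * np.pi) - np.pi  # wrap to [-pi, pi)
    return {"center": center, "size": size, "yaw": float(yaw)}

key_a = {"center": [0.0, 0.0, 0.0], "size": [4.5, 1.8, 1.5], "yaw": np.deg2rad(350.0)}
key_b = {"center": [10.0, 2.0, 0.0], "size": [4.5, 1.8, 1.5], "yaw": np.deg2rad(10.0)}
mid = interpolate_box(key_a, key_b, frame_a=0, frame_b=10, frame=5)
print(mid["center"], np.rad2deg(mid["yaw"]))  # centre (5, 1, 0), yaw of about 0 degrees
```

Linear interpolation assumes roughly constant velocity between keyframes. Annotators still need to review frames where an object accelerates or turns sharply.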

The goal is to make annotation faster, smarter, and more accurate so that it is not a bottleneck in your pipeline. Check out Mindkosh's LiDAR annotation tool.

Conclusion

Point cloud analysis is one of the most powerful tools at our disposal for understanding and interacting with the 3D world. From autonomous navigation to digital twins, the possibilities are vast — but only if you navigate the challenges with care.

As technology matures and our use cases grow more complex, so too must our approach to point cloud analysis. Precision, adaptability, and context-awareness are key.

If you’re working with 3D data — whether in mobility, construction, surveillance, or virtual reality — treat your point clouds with the care they deserve. Recognize the pitfalls, apply best practices, and keep pushing forward.

After all, in a world increasingly shaped by digital environments, every point counts.

Point clouds may be messy, but with the right tools and workflows — like Mindkosh’s LiDAR annotation platform — every point becomes a powerful data source.