Introduction

Point cloud object detection is transforming how machines interpret 3D environments. This guide explores key aspects of point cloud object detection, including foundational concepts, methods, and future trends.

What are Point Clouds?

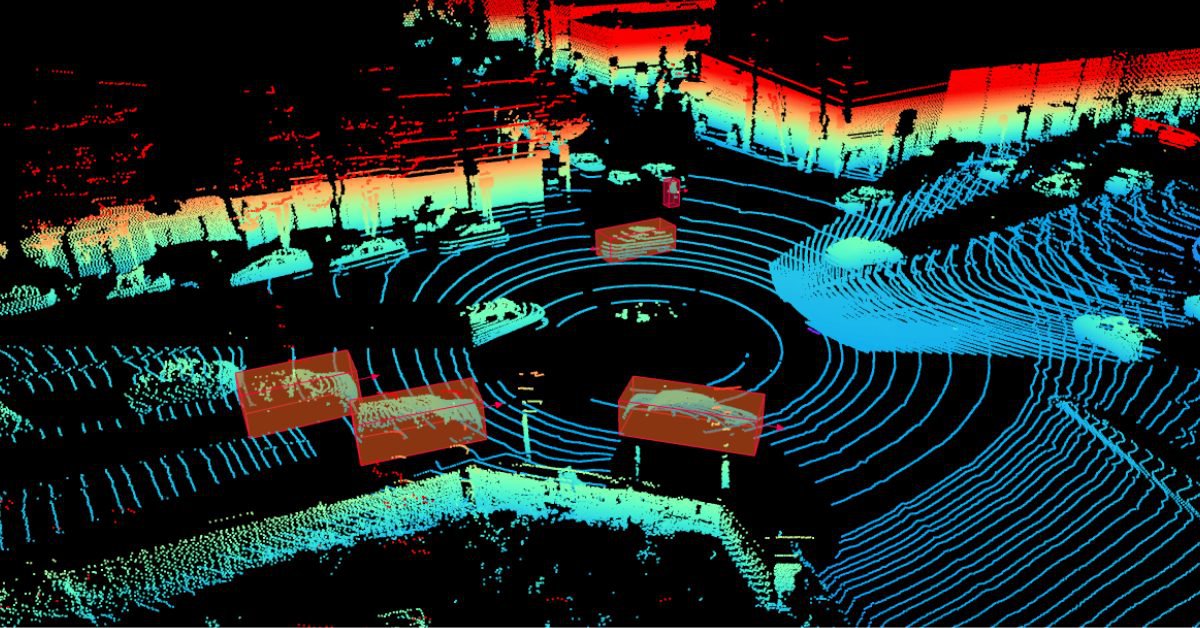

Point clouds are data points defined in 3D space, representing the surfaces and contours of objects. Collected through technologies like LiDAR, these points provide valuable spatial information about an object's shape and location. You can learn more about point clouds here.
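As a rough illustration of the data structure, a point cloud can be treated as nothing more than a list of (x, y, z) coordinates. The sketch below uses a small made-up cloud and only the Python standard library; the helper names `centroid` and `bounding_box` are illustrative, not taken from any particular library.

```python
# A tiny, made-up point cloud: each point is an (x, y, z) coordinate in metres.
points = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.5),
    (1.0, 2.0, 0.5),
    (0.0, 2.0, 1.0),
]

def centroid(cloud):
    """Mean position of all points in the cloud."""
    n = len(cloud)
    return tuple(sum(p[axis] for p in cloud) / n for axis in range(3))

def bounding_box(cloud):
    """Axis-aligned bounding box as (min_corner, max_corner)."""
    mins = tuple(min(p[axis] for p in cloud) for axis in range(3))
    maxs = tuple(max(p[axis] for p in cloud) for axis in range(3))
    return mins, maxs

print(centroid(points))      # (0.5, 1.0, 0.5)
print(bounding_box(points))  # ((0.0, 0.0, 0.0), (1.0, 2.0, 1.0))
```

Real sensors produce hundreds of thousands of such points per scan, which is why array libraries are normally used instead of plain lists.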

Why is Point Cloud Object Detection Important?

The ability to accurately detect objects within point clouds is critical for applications that require spatial awareness, including:

- Autonomous Driving: Enhances vehicle navigation and obstacle avoidance.

- Robotics: Facilitates object manipulation in dynamic environments.

- Augmented Reality (AR): Improves depth perception for immersive user experiences.

- Computer Vision: Enables comprehensive 3D scene understanding.

Acquisition Methods

Point clouds are commonly acquired using:

- LiDAR (Light Detection and Ranging): Provides high-precision data with long-range capabilities. LiDAR point clouds are usually dense, making them well suited to object detection.

- Depth Cameras: Capture depth information, suitable for indoor mapping.

- Stereo Vision: Uses two cameras for depth estimation, effective for mobile devices and AR.

Challenges and Limitations of Point Cloud Data

Challenges with point cloud data include sparsity, noise, and high computational demands, especially for real-time applications. Robust detection algorithms help manage these complexities, ensuring accurate object identification.

Calculating point cloud features

Point Descriptors

Fast Point Feature Histograms

FPFH is an optimized, lightweight version of the Point Feature Histogram (PFH). Rather than comparing every pair of points in a neighborhood, it computes geometric relationships only between each point and its direct neighbors, then blends in the neighbors' own histograms. For n points with k neighbors each, this lowers the cost from O(nk²) to O(nk), which makes FPFH a good fit for real-time applications where speed is critical.

Point Feature Histograms

PFH is a robust 3D feature descriptor that characterizes local geometry in point cloud data. It encodes the angular relationships between every pair of points in a point's neighborhood, using their positions and estimated surface normals, and bins the results into a histogram. This makes it suitable for tasks like object recognition, but at a high computational cost.
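Both descriptors are built from the same per-pair measurements: three angles and a distance computed from two points and their surface normals. The sketch below follows the commonly published formulation with a local (u, v, w) frame at the source point; it assumes unit-length normals, and the function name `pair_features` is illustrative. Normalizing `v` is a choice made here so that the angle terms stay in a fixed range.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def pair_features(p_s, n_s, p_t, n_t):
    """Return (alpha, phi, theta, d) for a source/target point pair.

    Normals are assumed to be unit length. Returns None when the frame is
    undefined (coincident points, or source normal parallel to the line
    joining the points).
    """
    delta = sub(p_t, p_s)
    d = math.sqrt(dot(delta, delta))
    if d == 0.0:
        return None
    direction = tuple(c / d for c in delta)
    u = n_s                                   # first axis: source normal
    v = cross(u, direction)                   # second axis
    v_len = math.sqrt(dot(v, v))
    if v_len == 0.0:
        return None
    v = tuple(c / v_len for c in v)
    w = cross(u, v)                           # third axis
    alpha = dot(v, n_t)
    phi = dot(u, direction)
    theta = math.atan2(dot(w, n_t), dot(u, n_t))
    return alpha, phi, theta, d

# Two points on a flat surface with identical normals: all angles are zero.
print(pair_features((0, 0, 0), (0, 0, 1), (1, 0, 0), (0, 0, 1)))
```

A descriptor is then formed by histogramming these values over a neighborhood: PFH does so for all pairs, FPFH only for pairs that include the query point.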

Feature Extraction Techniques

Max Pooling

Max pooling is a common feature extraction method for point clouds where the maximum value across a set of features (e.g., from neighboring points) is taken as the representative value of that set. This reduces the dimensionality of the data while retaining the most prominent features, making it efficient for capturing critical structures.
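A minimal sketch of the idea, assuming each neighboring point already has a three-channel feature vector (the values are made up): the pooled vector keeps the largest value per channel, and the result does not depend on the order of the points.

```python
def max_pool(features):
    """Element-wise maximum over a set of per-point feature vectors."""
    return [max(channel) for channel in zip(*features)]

# Hypothetical features for three neighboring points, three channels each.
neighbor_features = [
    [0.1, 0.9, 0.3],
    [0.7, 0.2, 0.4],
    [0.5, 0.6, 0.8],
]

pooled = max_pool(neighbor_features)
print(pooled)  # [0.7, 0.9, 0.8]

# Reordering the points leaves the pooled vector unchanged.
print(max_pool(list(reversed(neighbor_features))) == pooled)  # True
```

This order independence is what makes max pooling attractive for point clouds, which have no natural point ordering.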

Multi-Patch Feature Extraction

Multi-patch feature extraction divides a point cloud into multiple overlapping patches, then extracts features from each patch. This method enhances local detail capture and is effective for modeling complex geometries, as it considers various local contexts in the point cloud.
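One simple way to form overlapping patches is to gather all points within a fixed radius of a few seed points. The sketch below assumes that approach; the seed positions, the radius, and the toy per-patch feature (the patch centroid) are illustrative choices rather than a prescribed method.

```python
import math

def extract_patches(points, seeds, radius):
    """Return one patch per seed: every point within `radius` of that seed."""
    return [[p for p in points if math.dist(p, s) <= radius] for s in seeds]

def patch_feature(patch):
    """Toy per-patch feature: the centroid of the patch."""
    n = len(patch)
    return tuple(sum(p[axis] for p in patch) / n for axis in range(3))

# Five points along the x-axis and two seeds whose neighborhoods overlap.
points = [(float(x), 0.0, 0.0) for x in range(5)]
seeds = [(1.0, 0.0, 0.0), (3.0, 0.0, 0.0)]

patches = extract_patches(points, seeds, radius=1.5)
for patch in patches:
    print(len(patch), patch_feature(patch))
```

The point at x = 2 falls inside both patches, so each patch sees it in a different local context.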

Point Cloud Object Detection Methods

Traditional Methods

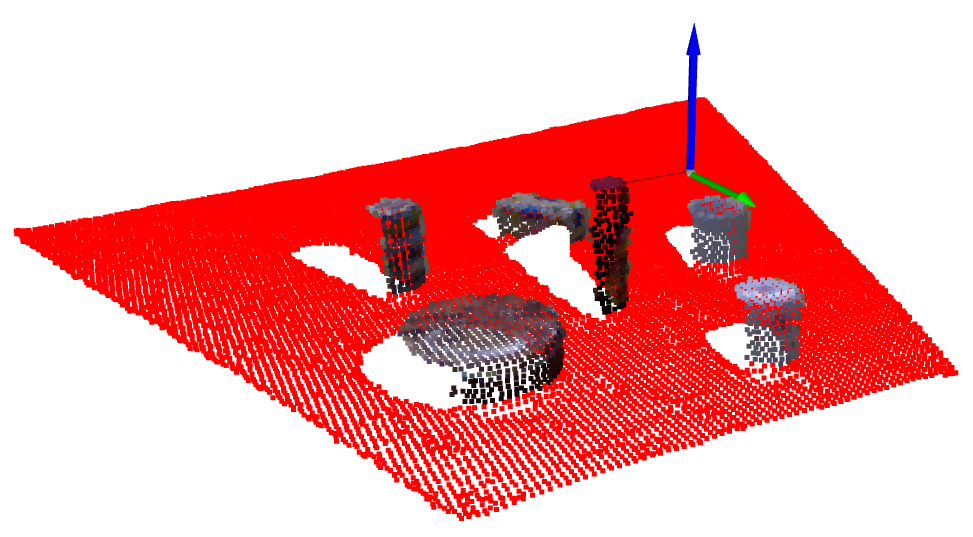

Traditional methods for object detection in point clouds use deterministic algorithms to partition the point cloud into different sections. The two most widely used algorithms are:

RANSAC (Random Sample Consensus)

RANSAC is an iterative method used to detect shapes within point clouds by fitting geometric models, such as planes or cylinders, while discarding outliers. By randomly sampling subsets of points and identifying the best-fitting model, RANSAC is robust for noisy data and works well in real-world scenarios. RANSAC is widely used for detecting ground points in point clouds.
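The ground-detection use case can be sketched as a plane fit: repeatedly pick three random points, build the plane through them, and keep the plane with the most points within a distance threshold. The data, threshold, iteration count, and function names below are assumptions chosen for illustration.

```python
import math
import random

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def plane_from_points(a, b, c):
    """Plane through three points as (unit_normal, d) with normal.p + d = 0."""
    normal = cross(sub(b, a), sub(c, a))
    length = math.sqrt(dot(normal, normal))
    if length < 1e-12:          # the three points are collinear
        return None
    normal = tuple(x / length for x in normal)
    return normal, -dot(normal, a)

def ransac_plane(points, threshold=0.05, iterations=200, seed=0):
    """Return (model, inliers) for the plane supported by the most points."""
    rng = random.Random(seed)
    best_model, best_inliers = None, []
    for _ in range(iterations):
        model = plane_from_points(*rng.sample(points, 3))
        if model is None:
            continue
        normal, d = model
        inliers = [p for p in points if abs(dot(normal, p) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    return best_model, best_inliers

# Synthetic scene: a flat 10 x 10 ground grid plus ten elevated clutter points.
ground = [(float(x), float(y), 0.0) for x in range(10) for y in range(10)]
clutter = [(x + 0.5, 0.7 * x, 1.0 + 0.3 * x) for x in range(10)]

(normal, d), inliers = ransac_plane(ground + clutter)
print(normal, d, len(inliers))
```

Once the ground plane is found, its inliers can be removed so that later stages only consider points belonging to objects.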

Hough Transform

The Hough Transform detects shapes by transforming point cloud data into a parameter space, where shapes (e.g., lines, circles) correspond to peaks in the transformed space. It’s effective for detecting specific geometric patterns and is especially useful for structured scenes, though computationally intensive for complex shapes.
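For planes in 3D, the parameter space can be a plane normal plus its distance from the origin. In the sketch below every point votes for all (normal, distance) cells it could lie on, and the cell with the most votes is the detected plane. The coarse discretization and the names `candidate_normals` and `hough_plane` are illustrative assumptions; the nested loops also show why the method becomes expensive as the parameter space grows.

```python
import math
from collections import Counter

def candidate_normals(steps):
    """Unit normals sampled over the upper hemisphere."""
    normals = [(0.0, 0.0, 1.0)]
    for j in range(1, steps + 1):                 # polar angle in (0, pi/2]
        phi = (math.pi / 2) * j / steps
        for i in range(4 * steps):                # azimuth in [0, 2*pi)
            theta = 2 * math.pi * i / (4 * steps)
            normals.append((math.sin(phi) * math.cos(theta),
                            math.sin(phi) * math.sin(theta),
                            math.cos(phi)))
    return normals

def hough_plane(points, steps=6, rho_step=0.1):
    """Return (normal, rho, votes) for the strongest plane normal.p = rho."""
    normals = candidate_normals(steps)
    votes = Counter()
    for p in points:
        for idx, n in enumerate(normals):
            rho = n[0] * p[0] + n[1] * p[1] + n[2] * p[2]
            votes[(idx, round(rho / rho_step))] += 1
    (idx, rho_bin), count = votes.most_common(1)[0]
    return normals[idx], rho_bin * rho_step, count

# Synthetic scene: 25 points on the plane z = 1 plus two stray points.
plane_points = [(float(x), float(y), 1.0) for x in range(5) for y in range(5)]
stray_points = [(0.5, 0.5, 3.0), (2.0, 1.0, -1.0)]

normal, rho, count = hough_plane(plane_points + stray_points)
print(normal, rho, count)
```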

Deep Learning-based Methods

Modern object detection methods leverage deep learning for better accuracy and scalability:

- PointNet: Processes unordered point sets directly, pioneering deep learning for point clouds.

- VoxelNet: Combines voxel representation and convolutional networks for 3D detection.

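- PointRCNN: A two-stage detector in the style of the Region-based Convolutional Neural Network (R-CNN) that generates 3D box proposals directly from raw points and then refines them, giving precise object localization.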

Comparison and Evaluation of Different Methods

Factors like accuracy, computational complexity, and robustness against noise are essential when comparing detection methods. PointRCNN, for example, excels in precision but may require more computational power compared to PointNet.

Challenges and considerations

Noise and outliers in Point Clouds

Handling noise is critical for reliable detection. Preprocessing techniques, such as filtering and normalization, reduce outliers, improving data quality for detection.
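One widely used filter of this kind is statistical outlier removal: a point is dropped when its average distance to its nearest neighbors is unusually large compared with the rest of the cloud. The sketch below is a brute-force version for small clouds; the values of `k` and `std_ratio` are assumptions that would need tuning on real data.

```python
import math
import statistics

def remove_statistical_outliers(points, k=4, std_ratio=2.0):
    """Keep points whose mean k-nearest-neighbor distance is not unusually large."""
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    limit = statistics.mean(mean_dists) + std_ratio * statistics.pstdev(mean_dists)
    return [p for p, m in zip(points, mean_dists) if m <= limit]

# A flat 5 x 5 grid of points plus one stray point far above it.
surface = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
stray = (2.0, 2.0, 10.0)

filtered = remove_statistical_outliers(surface + [stray])
print(len(surface) + 1, "->", len(filtered))
```

The brute-force neighbor search is quadratic in the number of points; practical implementations rely on a spatial index such as a k-d tree.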

Occlusion and clutter

Objects in a scene often partially hide one another, leaving incomplete or merged clusters of points that are hard to separate and identify as individual items. Sophisticated techniques, like multi-view fusion, help address these complexities.

Computational complexity

Processing large datasets in real time requires efficient algorithms. Solutions like down-sampling and data compression reduce the computational load, ideally with little loss of accuracy.
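Voxel-grid down-sampling is a common way to thin a cloud: space is divided into cubes of a fixed size, and all points falling in the same cube are replaced by their centroid. The following is a minimal sketch; the function name and the voxel size are illustrative.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all points in each voxel with their centroid."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)   # voxel index
        voxels[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in voxels.values()]

cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
reduced = voxel_downsample(cloud, voxel_size=1.0)
print(len(cloud), "->", len(reduced))  # 3 -> 2
```

A larger voxel size removes more points and speeds up later stages, at the price of losing fine detail, so the size is usually tuned per application.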

Evaluation Metrics

Performance is typically measured using metrics like:

- Intersection over Union (IoU): Measures localization accuracy as the overlap between a predicted bounding box and the ground-truth box, computed as the volume of their intersection divided by the volume of their union.

- Precision: The proportion of true positives out of all positive predictions. High precision means fewer false positives.

- Recall: The proportion of true positives out of all actual positives, reflecting the model's ability to find all relevant instances. High recall means fewer false negatives.
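These metrics can be sketched in a few lines. The example assumes axis-aligned boxes given as (xmin, ymin, zmin, xmax, ymax, zmax); detection benchmarks often use rotated boxes, which need a more involved intersection computation. The box coordinates and the detection counts are made up.

```python
def box_volume(box):
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])

def iou_3d(box_a, box_b):
    """IoU of two axis-aligned boxes (xmin, ymin, zmin, xmax, ymax, zmax)."""
    intersection = 1.0
    for axis in range(3):
        low = max(box_a[axis], box_b[axis])
        high = min(box_a[axis + 3], box_b[axis + 3])
        if high <= low:            # no overlap along this axis
            return 0.0
        intersection *= high - low
    union = box_volume(box_a) + box_volume(box_b) - intersection
    return intersection / union

def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

predicted = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
ground_truth = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
print(iou_3d(predicted, ground_truth))      # intersection 4, union 12

# 8 correct detections, 2 spurious detections, 4 missed objects.
print(precision_recall(tp=8, fp=2, fn=4))
```

In practice a prediction counts as a true positive only when its IoU with a ground-truth box exceeds a chosen threshold, so the two kinds of metric are used together.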

Future trends and research directions

Novel Deep Learning architectures

Emerging architectures aim to improve efficiency in processing point clouds, especially critical for resource-limited devices like drones.

Real-time Point Cloud processing

With applications in autonomous driving, research focuses on reducing latency and improving processing speeds for instantaneous decision-making.

Multi-modal Fusion

Integrating point clouds with visual data enhances spatial understanding, creating richer representations for detection algorithms. You can read a more detailed analysis of multi-sensor fusion here.
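A basic building block of such fusion is projecting 3D points into the camera image so that each point can be paired with pixel data. The sketch below assumes a pinhole camera model and points already expressed in the camera's coordinate frame (z pointing forward); the intrinsic parameters `fx`, `fy`, `cx`, `cy` and the image size are hypothetical values, not taken from any real sensor.

```python
def project_to_image(points_cam, fx, fy, cx, cy, width, height):
    """Project camera-frame 3D points to pixels; returns (u, v, depth) tuples."""
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:                       # behind the camera: not visible
            continue
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0 <= u < width and 0 <= v < height:
            pixels.append((u, v, z))
    return pixels

points_cam = [
    (0.0, 0.0, 5.0),     # straight ahead
    (1.0, 0.0, 10.0),    # slightly to the right
    (0.0, 0.0, -2.0),    # behind the camera
    (50.0, 0.0, 1.0),    # far outside the field of view
]

pixels = project_to_image(points_cam, fx=500.0, fy=500.0, cx=320.0, cy=240.0,
                          width=640, height=480)
print(pixels)  # [(320.0, 240.0, 5.0), (370.0, 240.0, 10.0)]
```

With real sensors the points must first be transformed from the LiDAR frame into the camera frame using a calibrated rotation and translation.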