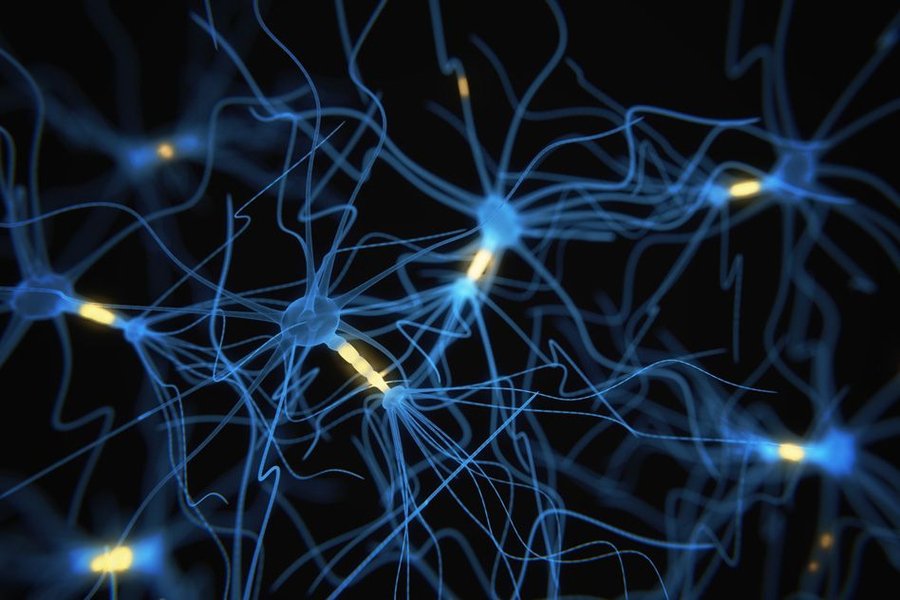

Although the current generation of neural networks (usually called the 2nd generation) has enabled breakthrough progress in many fields, these networks are biologically inaccurate. Models of cortical hierarchies from neuroscience have strongly inspired the architectural principles behind deep neural networks (DNNs). At the implementation level, however, only marginal similarities can be recognized between brain-like computation and the artificial neural networks (ANNs) used in AI applications.

One obvious difference is that neurons in ANNs are mostly non-linear but continuous function approximators that operate on a common clock cycle, whereas biological neurons compute with asynchronous spikes that signal the occurrence of some characteristic event by digital and temporally precise action potentials.

What are Spiking Neural Networks?

The 3rd generation of neural networks, Spiking Neural Networks or SNNs, aims to bridge the gap between neuroscience and machine learning by using biologically realistic models of neurons to carry out computation. The promise of SNNs stems from the favorable properties exhibited by real neural circuits such as brains: analog computation, low power consumption, fast inference, event-driven processing, online learning, and massive parallelism. With event-based vision and audio sensors steadily maturing, deep SNNs are one of the most promising concepts for processing such inputs efficiently.

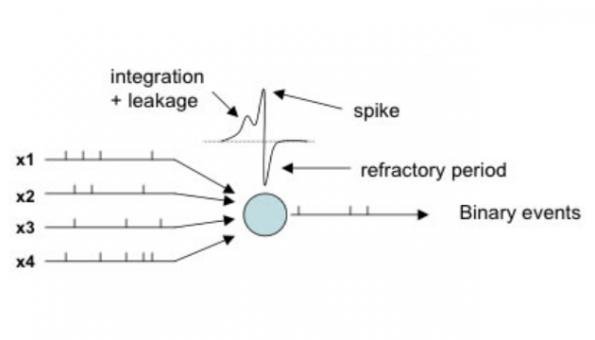

A spiking neural network (SNN) is fundamentally different from the neural networks the machine learning community is familiar with. SNNs operate using spikes, which are discrete events that take place at specific points in time, rather than using continuous values. The occurrence of a spike is determined by differential equations that represent various biological processes, the most important of which is the membrane potential of the neuron. Essentially, once a neuron reaches a certain potential, it spikes, and the potential of that neuron is reset.

The Leaky Integrate and Fire model

The most common model of this behavior is the leaky integrate-and-fire (LIF) model. In essence, this model states that a neuron combines inputs (spikes) from other neurons through its dendrites, and if the cumulative potential exceeds a threshold, it fires. The "leaky" part refers to the fact that, in the absence of input, the accumulated potential decays back toward its resting value. Information in this model is encoded through spikes. By looking at the frequency of spikes, the time between spikes, and other characteristics of the spike train, a lot of information can be encoded within just a few spikes. In fact, you could theoretically encode binary information in these spikes by either sending or not sending a spike at a specific interval.
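The integrate, leak, fire, and reset steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `simulate_lif`, the parameter values, and the simple Euler integration scheme are all assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0, v_rest=0.0,
                 v_reset=0.0, v_threshold=1.0, resistance=1.0):
    """Euler-integrate a leaky integrate-and-fire neuron.

    Assumed membrane dynamics: tau * dV/dt = -(V - v_rest) + R * I(t).
    Returns the membrane potential trace and the step indices of spikes.
    """
    v = v_rest
    trace = []
    spike_steps = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input.
        v += (-(v - v_rest) + resistance * current) * (dt / tau)
        if v >= v_threshold:
            # Threshold crossed: emit a spike and reset the potential.
            spike_steps.append(step)
            v = v_reset
        trace.append(v)
    return trace, spike_steps


# A constant drive above threshold produces regular spiking,
# while a drive that settles below threshold never fires.
_, strong_spikes = simulate_lif([1.5] * 200)
_, weak_spikes = simulate_lif([0.5] * 200)
print("strong input spikes:", len(strong_spikes))
print("weak input spikes:", len(weak_spikes))
```

With the weaker input, the leak balances the drive before the threshold is reached, so the neuron stays silent. That is the sense in which an LIF neuron only communicates when its input is strong enough.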

At first glance, this may seem like a step backwards. We have moved from continuous outputs in ANNs to discrete ones spread through time, and these spike trains are not very interpretable. However, spike trains offer us an enhanced ability to process spatio-temporal data, or in other words, real-world sensory data. The spatial aspect refers to the fact that neurons are only connected to neurons local to them, so they inherently process chunks of the input separately (similar to how a CNN would using a filter). The temporal aspect refers to the fact that spike trains occur over time, so what we lose in binary encoding, we gain in the temporal information of the spikes. This allows us to naturally process temporal data without the extra complexity that RNNs add. In fact, theoretical work has shown that spiking neurons are, in principle, at least as computationally powerful as traditional artificial neurons.
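To make the idea of carrying information in spike frequency and spike timing more concrete, here is a small sketch of two common encoding schemes: a rate code and a latency code. The function names, the Bernoulli sampling, and the linear value-to-latency mapping are illustrative assumptions, not something prescribed by the LIF model.

```python
import random


def rate_encode(value, n_steps, rng):
    """Rate code: at each step, spike with probability `value` (in [0, 1])."""
    return [1 if rng.random() < value else 0 for _ in range(n_steps)]


def latency_encode(value, n_steps):
    """Latency code: a single spike; stronger inputs fire earlier."""
    spike_step = round((1.0 - value) * (n_steps - 1))
    return [1 if step == spike_step else 0 for step in range(n_steps)]


rng = random.Random(0)  # fixed seed so the run is repeatable
bright = rate_encode(0.9, 1000, rng)
dim = rate_encode(0.1, 1000, rng)
# The spike counts track the encoded intensity.
print("bright spikes:", sum(bright), "dim spikes:", sum(dim))

# One spike whose position in time carries the value.
print(latency_encode(0.75, 9))  # -> [0, 0, 1, 0, 0, 0, 0, 0, 0]
```

The rate code needs many steps to convey a value reliably, whereas the latency code conveys it with a single, precisely timed spike. This is one way to see why timing can compensate for the binary nature of each spike.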

Advantages offered by SNNs over ANNs

- Energy efficiency

With the advent of LLMs, we are seeing how large the energy consumption of machine learning models can be. SNNs only transmit information when necessary, potentially leading to lower power consumption compared to ANNs, which require constant calculations.

- Real-time processing

SNNs can process information as it arrives, similar to how brains handle sensory data. To understand this more clearly, think about how a typical speech recognition system works today: a sequence of speech is passed through a deep learning network, which outputs the textual content of the entire audio clip. Such a setup cannot process speech in real time, however, because it needs a whole chunk of audio before it can process it. In contrast, SNNs can process the audio as it streams through the network.

- Dynamic behavior

SNNs can adapt to changing inputs in real time due to the temporal nature of spike coding. This allows them to be potentially more robust than their ANN counterparts.

So why have we not seen widespread adoption of SNNs yet?

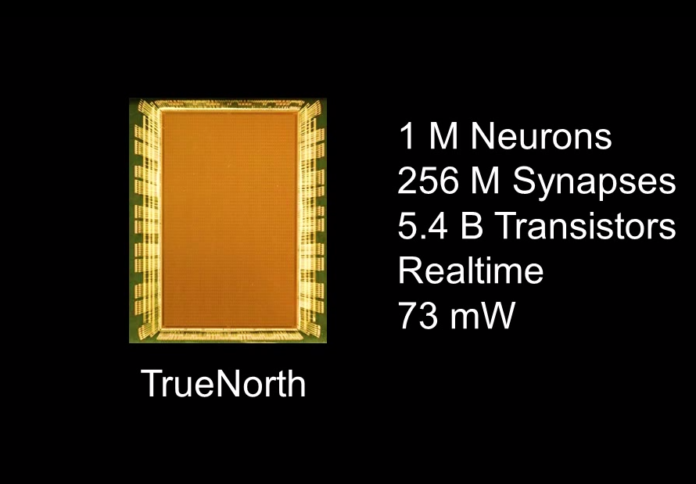

The main issue is that of training an SNN. Neurobiologically speaking, we have unsupervised learning methods such as Hebbian learning ("neurons that fire together wire together") and spike-timing-dependent plasticity (STDP), but no known effective supervised training methods for SNNs that offer higher performance than 2nd generation networks. Since spike trains are not differentiable, we cannot train SNNs using gradient descent without losing the precise temporal information in spike trains. Therefore, in order to properly use SNNs for real-world tasks, we need to develop an effective supervised learning method. This is a very difficult task, as doing so would involve determining how the human brain actually learns, given the biological realism in these networks.

Another issue, one that we might be close to solving, is that simulating SNNs on normal computer hardware is very computationally intensive, since it requires simulating differential equations. However, neuromorphic hardware such as IBM's TrueNorth aims to solve this by simulating neurons using specialized hardware that can take advantage of the discrete and sparse nature of neuronal spiking behavior.
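As a rough illustration of the unsupervised STDP rule mentioned above, the sketch below implements a simple pair-based variant: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened when the order is reversed, with the effect decaying exponentially in the time difference. The function name, the exponential window, and all constants are assumptions chosen for illustration. Real formulations vary.

```python
import math


def stdp_weight_change(delta_t, a_plus=0.01, a_minus=0.012,
                       tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight update.

    delta_t = t_post - t_pre, in the same time units as the tau constants.
    """
    if delta_t > 0:
        # Pre fired before post: potentiate (strengthen) the synapse.
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        # Post fired before pre: depress (weaken) the synapse.
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


weight = 0.5
# Hypothetical (t_pre, t_post) spike pairs.
for t_pre, t_post in [(10.0, 15.0), (40.0, 38.0), (70.0, 120.0)]:
    weight += stdp_weight_change(t_post - t_pre)
print("weight after three spike pairs:", weight)
```

Note that the rule only uses the local timing of two spikes and involves no error signal, which is why it counts as unsupervised and why it does not directly solve the supervised training problem described above.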

SNNs are an interesting class of neural networks, even if they are not practically useful right now. How these networks behave can give us important insights into how our brain actually works. Our brain is still the most efficient computing machine known to mankind. Uncovering its secrets can help us advance artificial intelligence to new horizons.